The shortage of DRAM and NAND chips caused by the demands of AI data centers is likely to last into 2027, making every server more expensive.

Max Smolaks

Max is a Research Analyst at Uptime Institute Intelligence. Mr Smolaks’ expertise spans digital infrastructure management software, power and cooling equipment, and regulations and standards. He has 10 years’ experience as a technology journalist, reporting on innovation in IT and data center infrastructure.

msmolaks@uptimeinstitute.com

Latest Research

In this webinar and report, Uptime Intelligence looks beyond the more obvious trends of 2026 and examines some of the latest developments and challenges shaping the industry. AI is transforming data center strategies, but its impact remains uneven…

Uptime Intelligence looks beyond the more obvious trends of 2026 and examines some of the latest developments and challenges shaping the data center industry.

Nvidia’s DGX-Ready certification for colocation facilities has been around for nearly six years, yet what the program actually entails remains obscure.

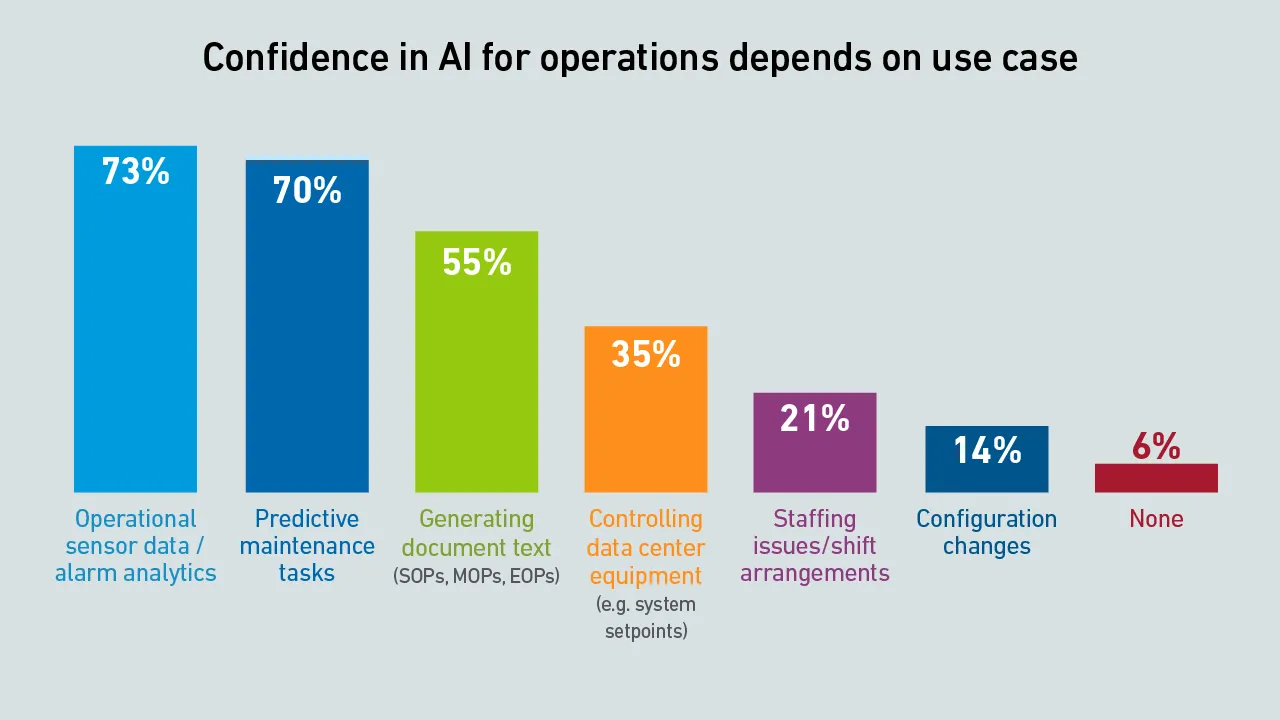

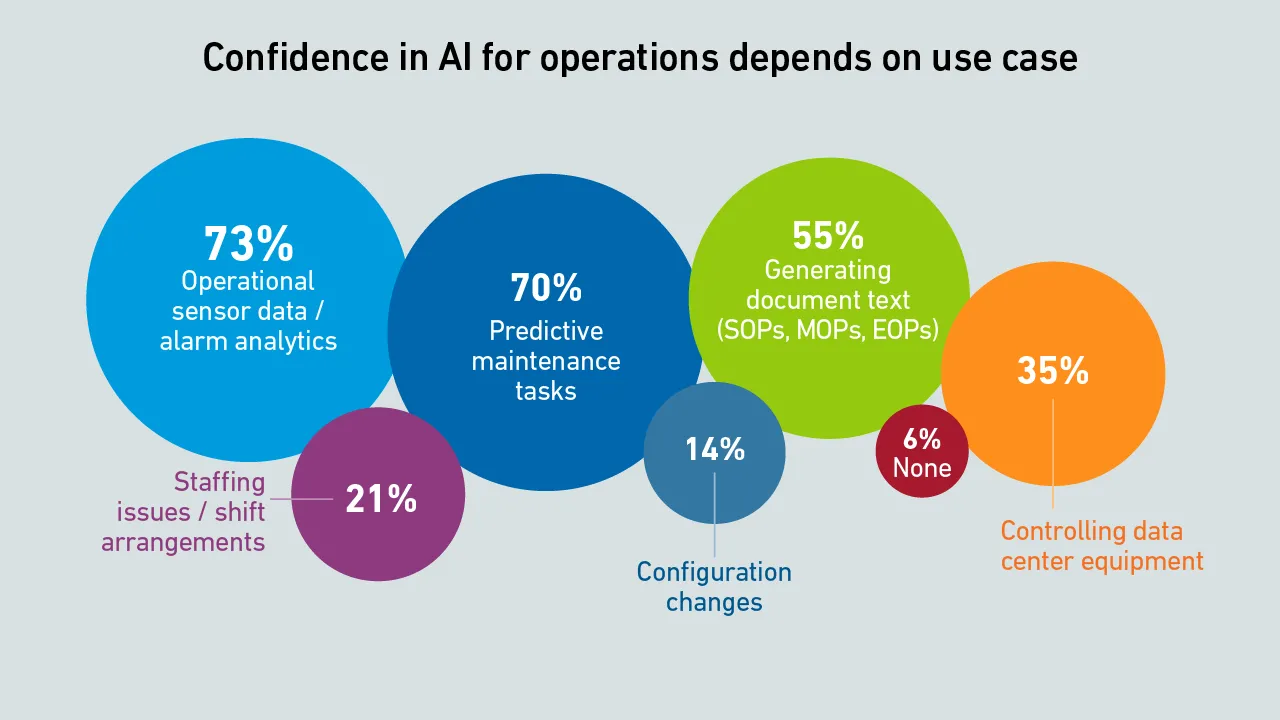

There is an expectation that AI will be useful in data center operations. For this to happen, software vendors need to deliver new products and use cases, and these are starting to appear more often.

Research into neuromorphic computing could lead to the creation of smaller, faster and more energy-efficient AI accelerators. This would have a transformative impact on digital infrastructure.

From on-prem AI to high-density IT, this webinar examined survey findings on how operators are preparing for what's next.

Most operators do not trust AI-based systems to control equipment in the data center. This has implications for software products that are already available, as well as for those in development.

Several operators originally established to mine cryptocurrencies are now building hyperscale data centers for AI. How did this change happen?

The Uptime Institute Global Data Center Survey, now in its 15th year, is the most comprehensive and longest-running study of its kind. The findings in this report highlight the practices and experiences of data center owners and operators in the…

The 15th edition of the Uptime Institute Global Data Center Survey highlights the experiences and strategies of data center owners and operators in the areas of resiliency, sustainability, efficiency, staffing, cloud and AI.

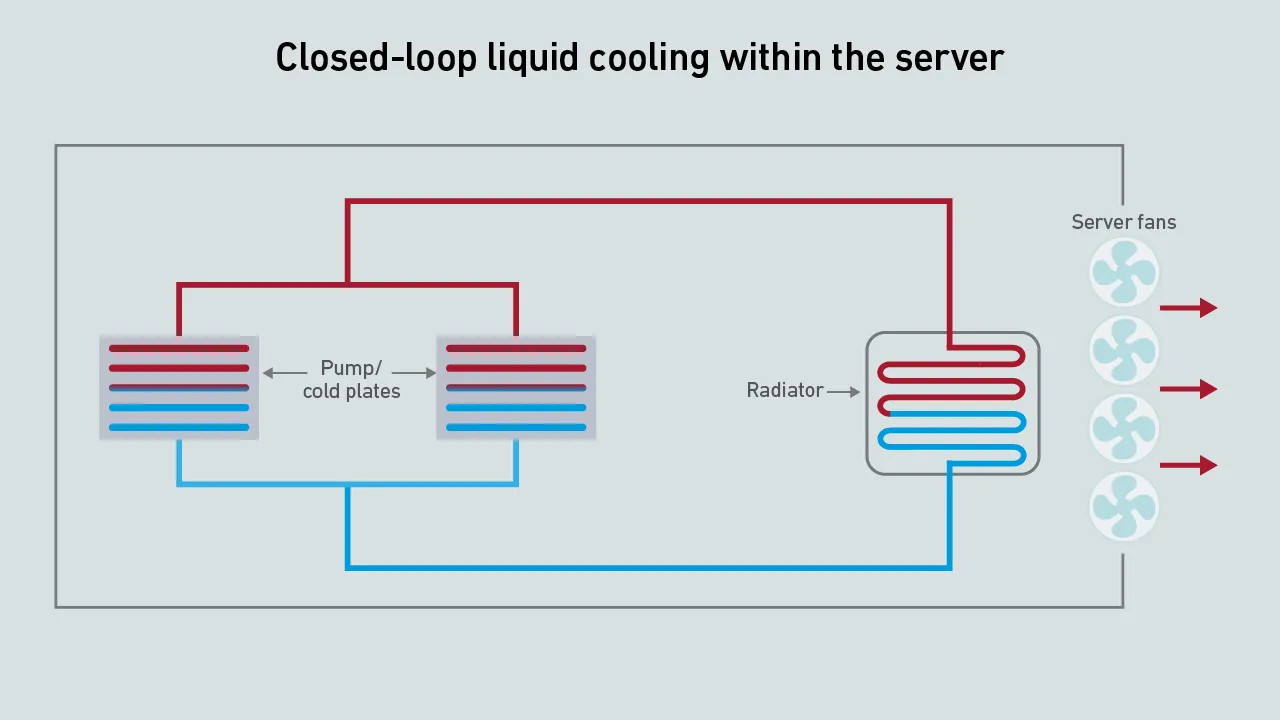

Liquid cooling contained within the server chassis lets operators cool high-density hardware without modifying existing infrastructure. However, this type of cooling has limitations in terms of performance and energy efficiency.

Uptime experts discuss and answer questions on grid demands and sustainability strategies while debating how to meet decarbonization goals.

Join Uptime experts as they discuss and answer questions on grid demands and sustainability strategies while debating how to meet decarbonization goals. This is a member and subscriber-only event.

Today, GPU designers pursue outright performance over power efficiency. This is a challenge for inference workloads, which prize efficient token generation. GPU power management features can help but require more attention.