As AI adoption spreads, most data centers will not host large training clusters, but many will need to operate specialized systems to run inference close to applications.

Dr. Owen Rogers

Dr. Owen Rogers is Uptime Institute’s Senior Research Director of Cloud Computing. Dr. Rogers has been analyzing the economics of cloud for over a decade as a chartered engineer, product manager and industry analyst. He covers all areas of cloud, including AI, FinOps, sustainability, hybrid infrastructure and quantum computing.

orogers@uptimeinstitute.com

Latest Research

In 2026, enterprises will be more realistic about their use of generative AI, prioritizing simple use cases that deliver clear, timely value over those more innovative projects where returns — and successful outcomes — are less assured.

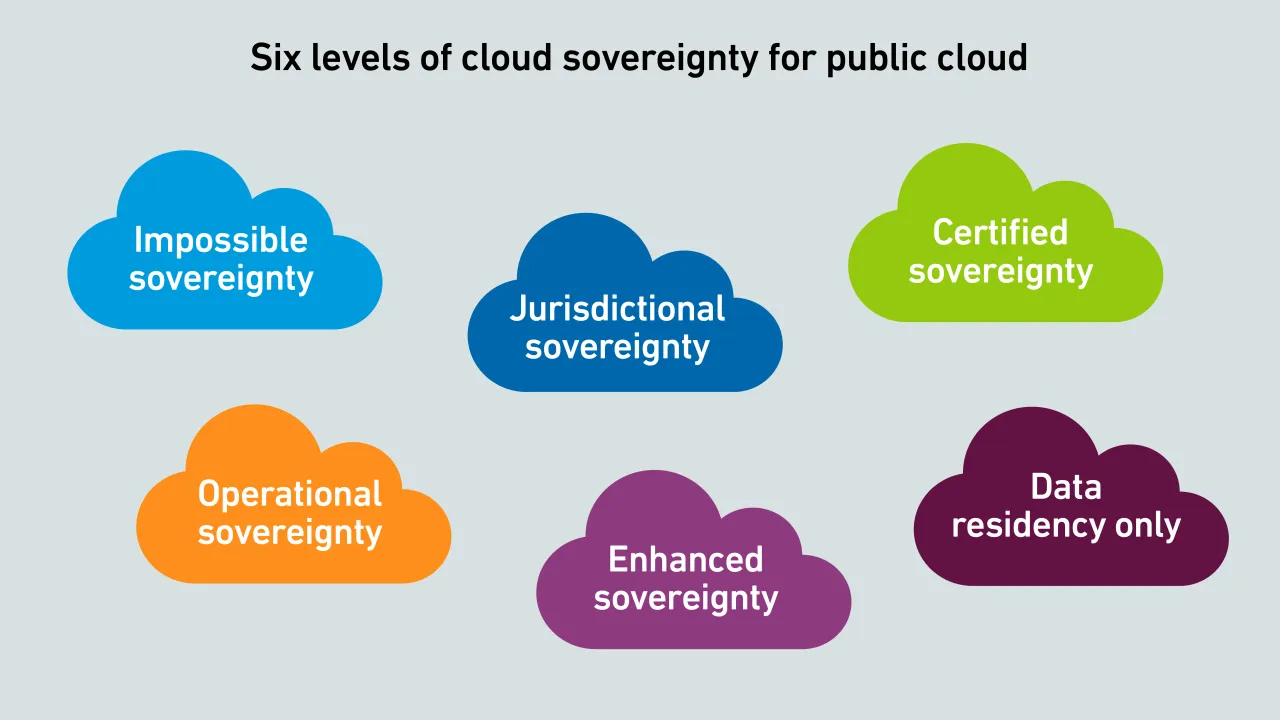

Cloud sovereignty is often treated as a binary choice, but in reality it is a spectrum shaped by law, operations, technologies and supply chains. This framework explains the differences between sovereign public cloud options.

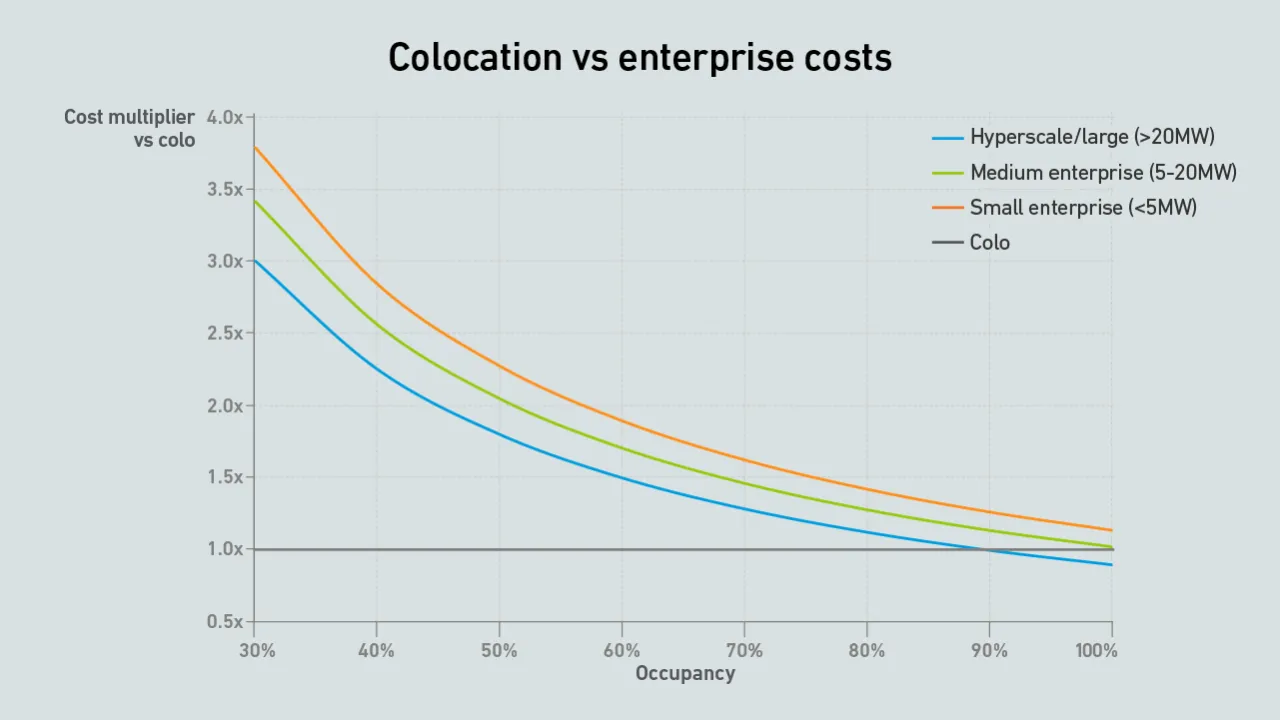

Simple arithmetic shows that newly constructed, large-scale private data centers with high occupancy rates can sometimes undercut colos on unit costs, but in most cases colos remain significantly cheaper.

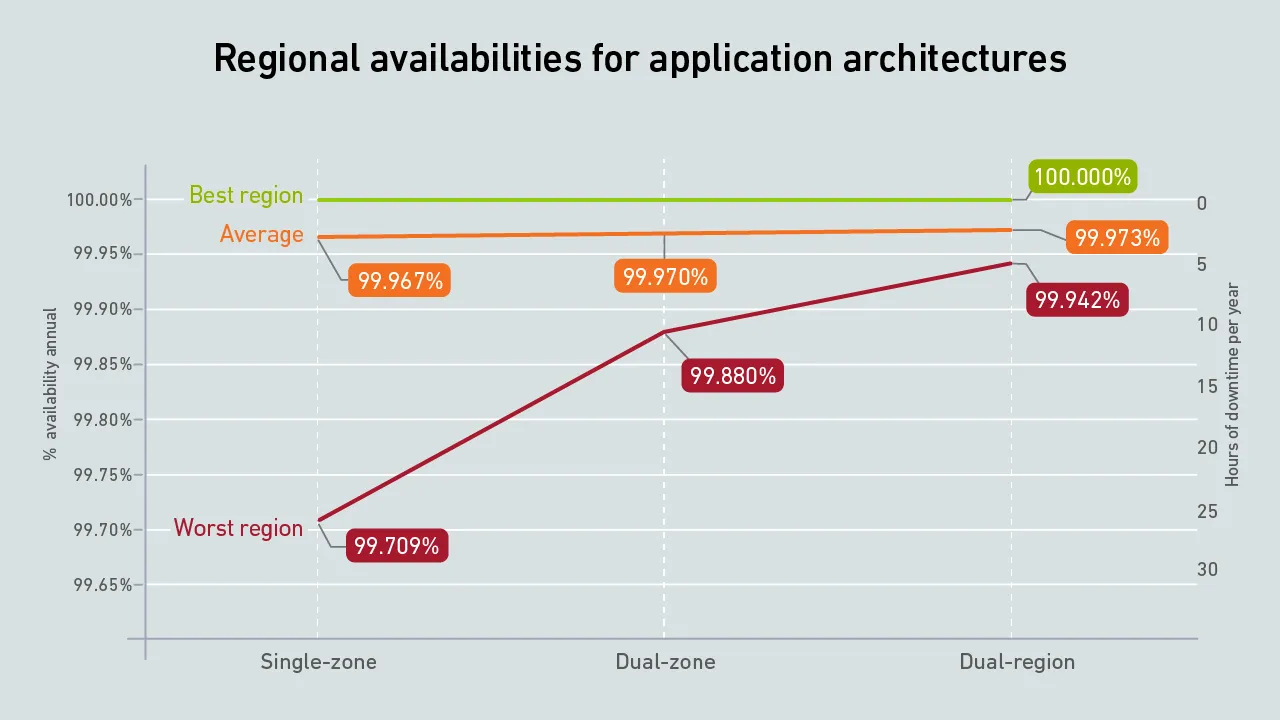

The Azure outage highlights a blind spot in resiliency planning: it is not only cloud compute that can fail. Shared global network services such as DNS and content delivery networks (CDNs) can disrupt access to systems anywhere, including on-premises.

Financial institutions are embracing public cloud for some mission-critical workloads, and using it as a launchpad for AI development.

A major outage at AWS's Virginia region took many global organizations offline. What can enterprises do to reduce or negate the impact of such widespread outages?

The US CLOUD Act lets US authorities access cloud data stored overseas. Encryption offers protection only when keys are firmly controlled, which creates challenges for enterprises but an opportunity for colocation providers.

By raising debt, building data centers and using colos, neoclouds shield hyperscalers from the financial and technological shocks of the AI boom. They share in the upside if demand grows, but are burdened with stranded assets if it stalls.

The remote nature of cloud computing makes it vulnerable to extraterritorial legal reach. Colocation providers, by contrast, manage only infrastructure, not data, which shields them from the same level of foreign governmental access.

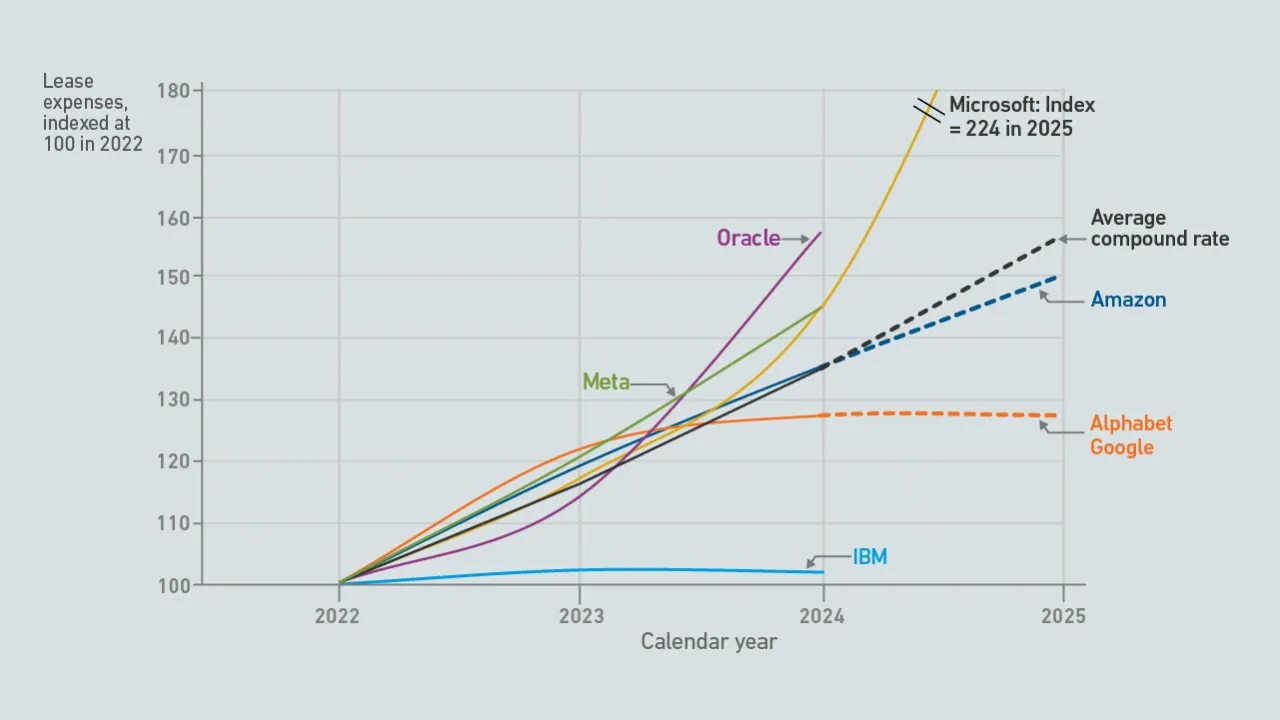

Financial data suggests that hyperscalers' use of colocation facilities has grown substantially over the past few years, and their investment in colocation shows no signs of slowing down.

AWS has recently cut prices on a range of GPU-backed instances. These price reductions make it harder to justify an investment in dedicated AI infrastructure.

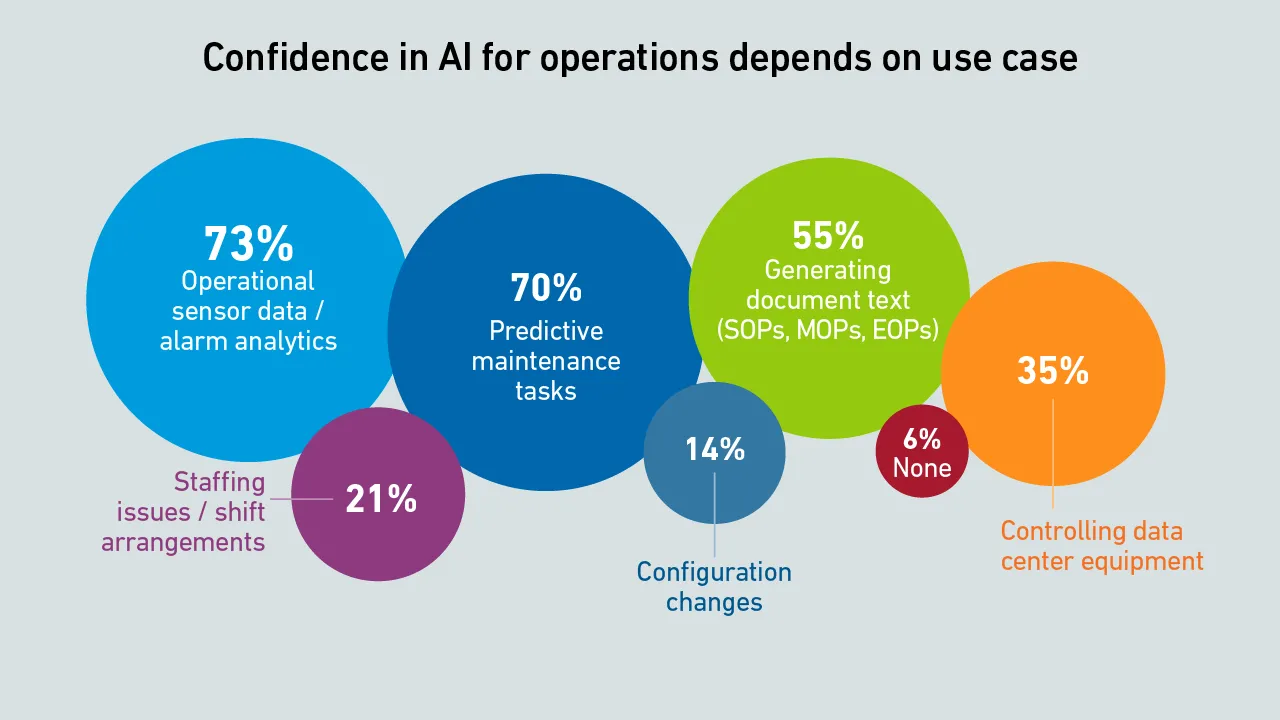

The 15th edition of the Uptime Institute Global Data Center Survey highlights the experiences and strategies of data center owners and operators in the areas of resiliency, sustainability, efficiency, staffing, cloud and AI.

The terms "retail" and "wholesale" colocation not only describe different types of colocation customers, but also how providers price and package their products, and the extent to which customers can specify their requirements.

Serverless container services enable rapid, per-second scalability, which is ideal for AI inference. However, inconsistent and opaque pricing metrics hinder comparisons. This pricing tool compares the cost of services across providers.