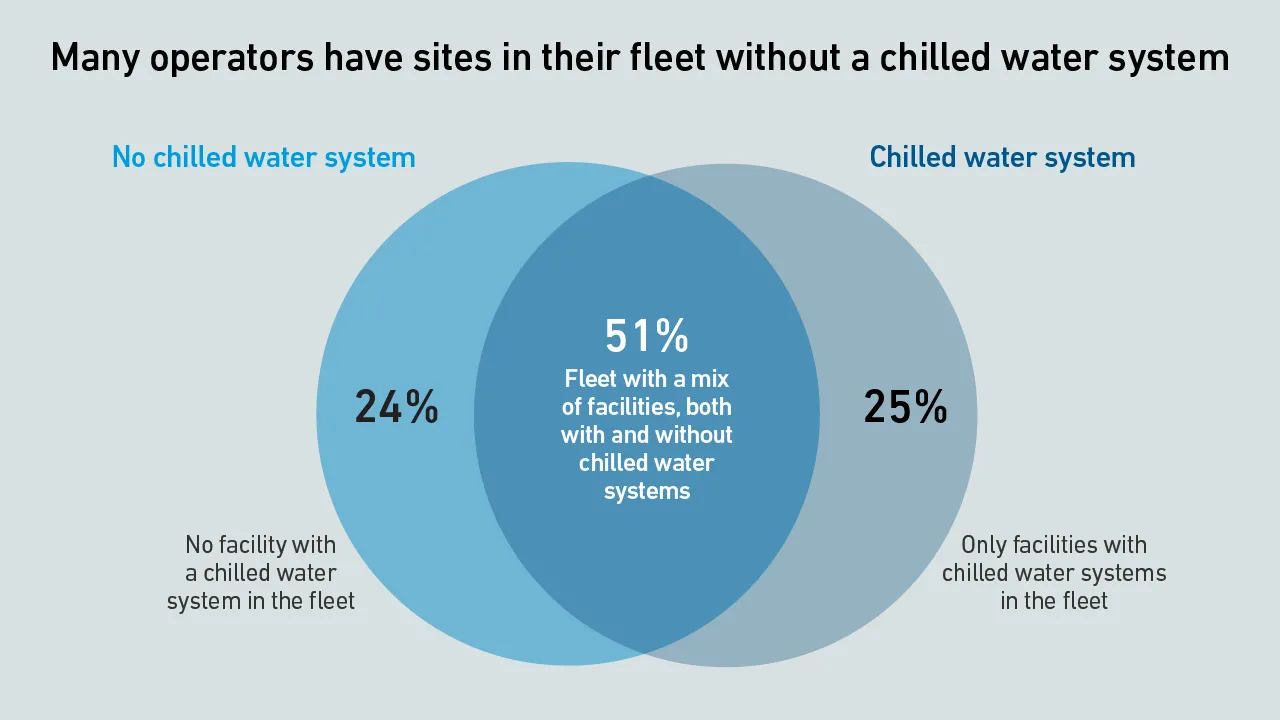

Nvidia CEO Jensen Huang’s comment that liquid-cooled AI racks will need no chillers caused some turbulence. However, the chiller-free data center is not a new idea, and it is unlikely to suit most operators.

Direct liquid cooling (DLC) was developed to handle the high heat loads of densified IT. Yet true mainstream adoption remains elusive: DLC still awaits design refinements that address outstanding operational issues in mission-critical applications.

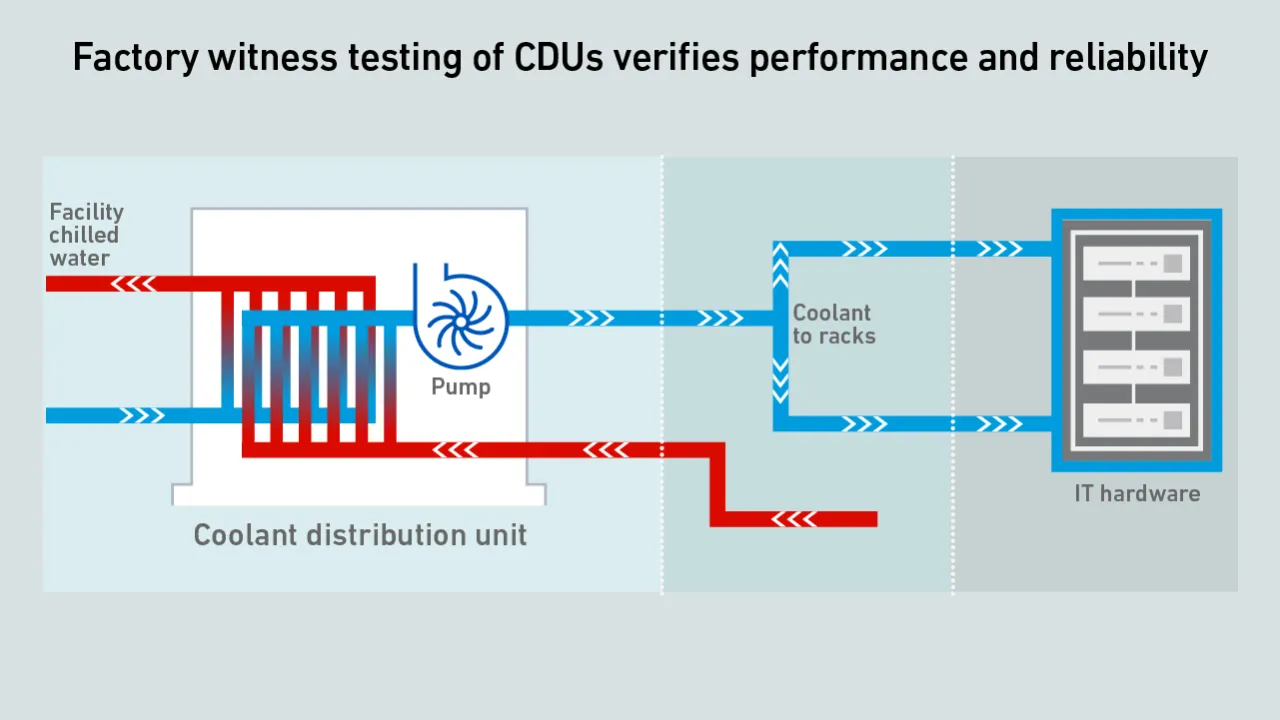

DLC introduces challenges at every level of data center commissioning. Some end users accept coolant distribution units (CDUs) without factory witness testing, a significant departure from the conventional commissioning script.

Uptime Intelligence looks beyond the more obvious trends of 2026 and examines some of the latest developments and challenges shaping the data center industry.

Data4 needed to test how to build and commission liquid-cooled high-capacity racks before offering them to customers. The operator used a proof-of-concept test to develop an industrialized version, which is now in commercial operation.

A wave of consolidation and investment activity in cooling systems over the past 12 months reflects a widespread expectation that spending on data center infrastructure will continue to surge.

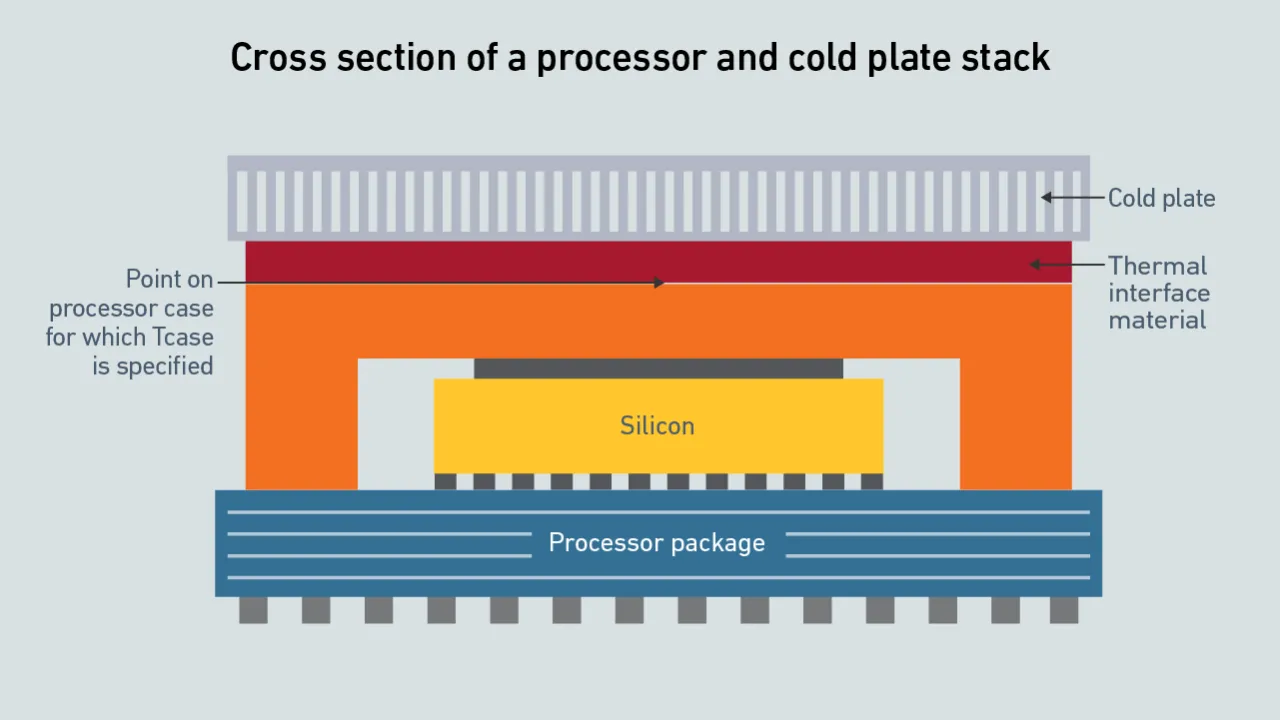

Performant cooling requires a full-system approach to eliminate thermal bottlenecks. Extreme silicon thermal design power (TDP) and highly efficient cooling need not be mutually exclusive if data center operators and chip vendors work together.

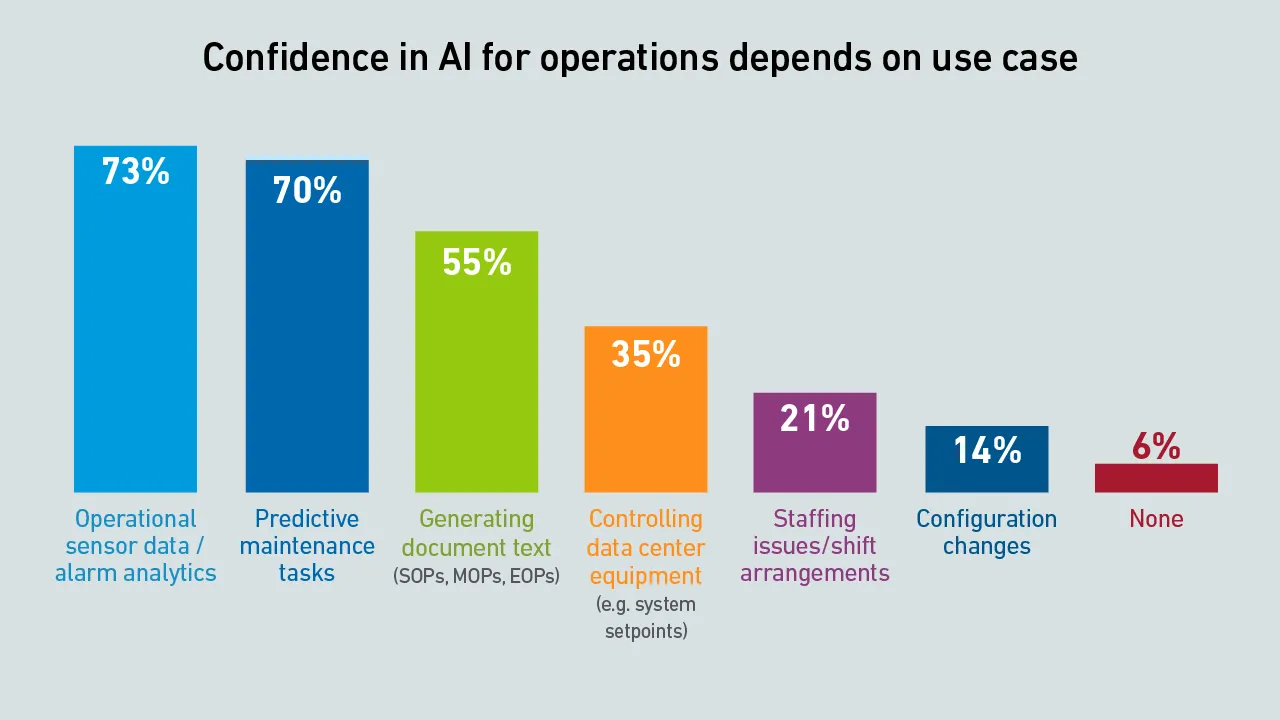

AI is changing how data centers operate. What began as algorithmic fine-tuning of chilled-water plants is now moving into the IT side of operations, closer to the load. But will operators ever trust AI enough to let it run the room?

Currently, the most straightforward way to support DLC loads in many data centers is to use existing air-cooling infrastructure combined with air-cooled CDUs.

Liquid-cooled colocation capacity remains niche, but demand is likely to grow. Colocation providers planning capacity for DLC need to address novel questions about individual tenant needs, operations and SLAs.

From on-prem AI to high-density IT, this webinar examined survey findings on how operators are preparing for what's next.

Most operators do not trust AI-based systems to control equipment in the data center. This has implications for software products that are already available, as well as for those in development.

Rising IT power densities are pushing chilled-water systems to their limits. AI-driven control offers predictive load management, optimized sequencing and a stable delta-T under demanding conditions.

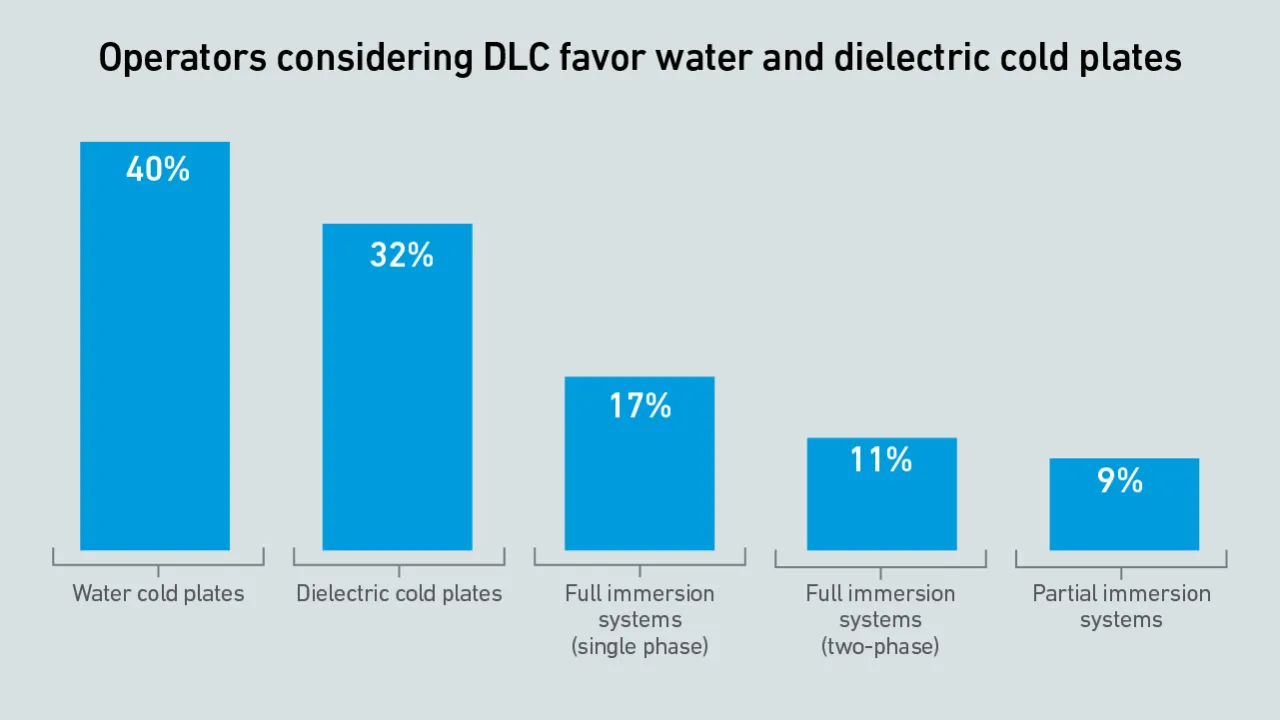

Water cold plates still lead DLC adoption, but more enterprise operators are considering dielectric cold plates than did so last year. The next DLC adopters may be open to multiple technologies while remaining cautious about leak risks.

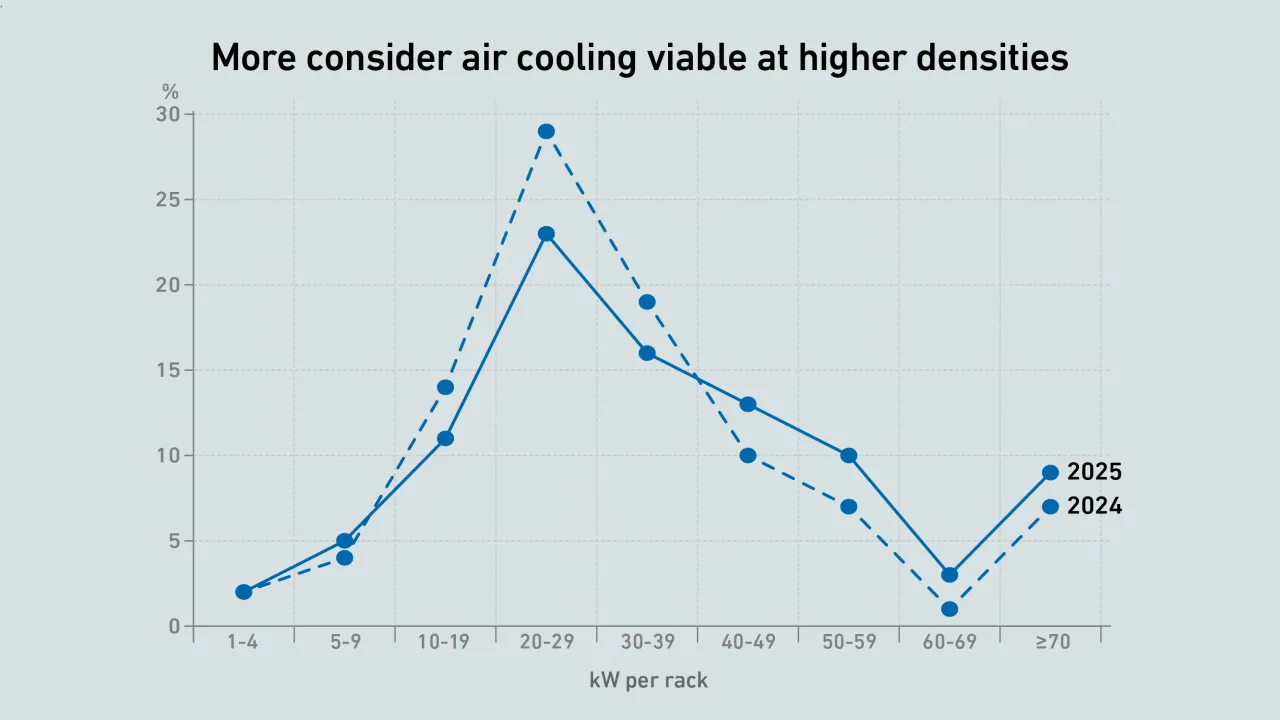

Against a backdrop of higher densities and the push toward liquid cooling, air remains the dominant choice for cooling IT hardware. As long as air cooling works, many see no reason to change, and a growing share of operators think it is viable even at high densities.

Daniel Bizo

Jacqueline Davis

Jay Dietrich

Douglas Donnellan

Andy Lawrence

Max Smolaks

Dr. Rand Talib

Peter Judge