By raising debt, building data centers and using colos, neoclouds shield hyperscalers from the financial and technological shocks of the AI boom. They share in the upside if demand grows, but are burdened with stranded assets if it stalls.

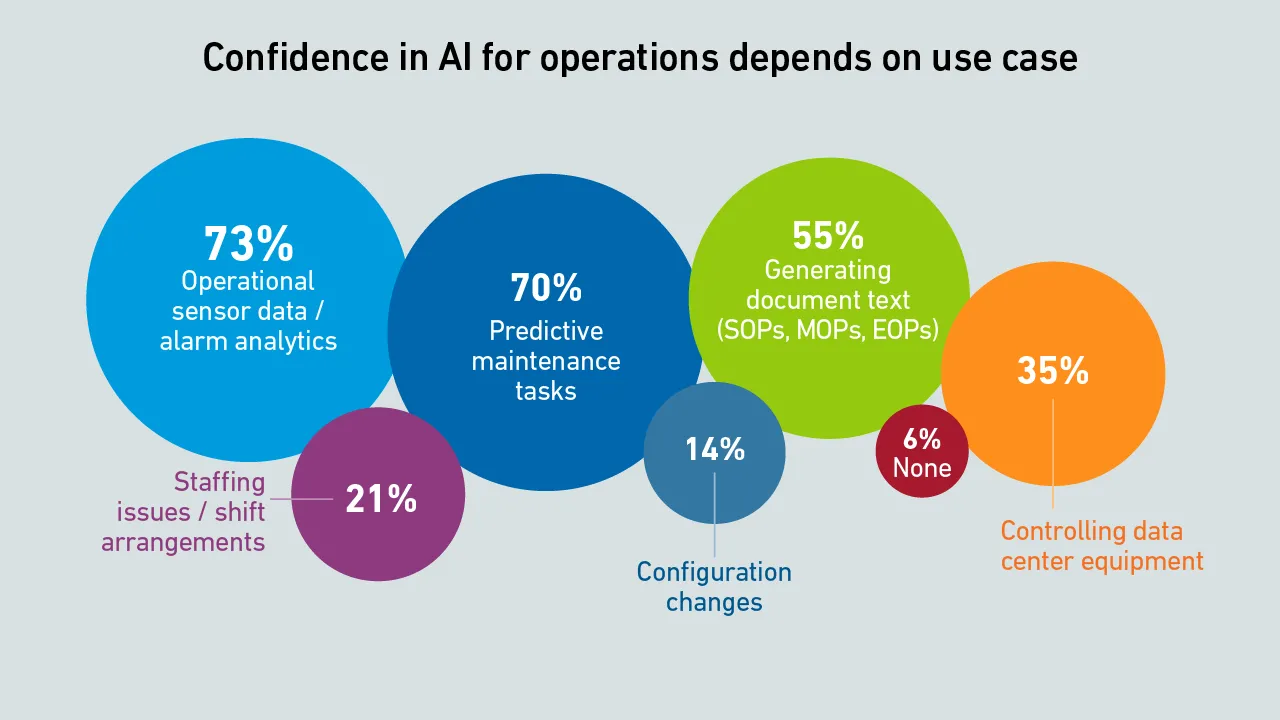

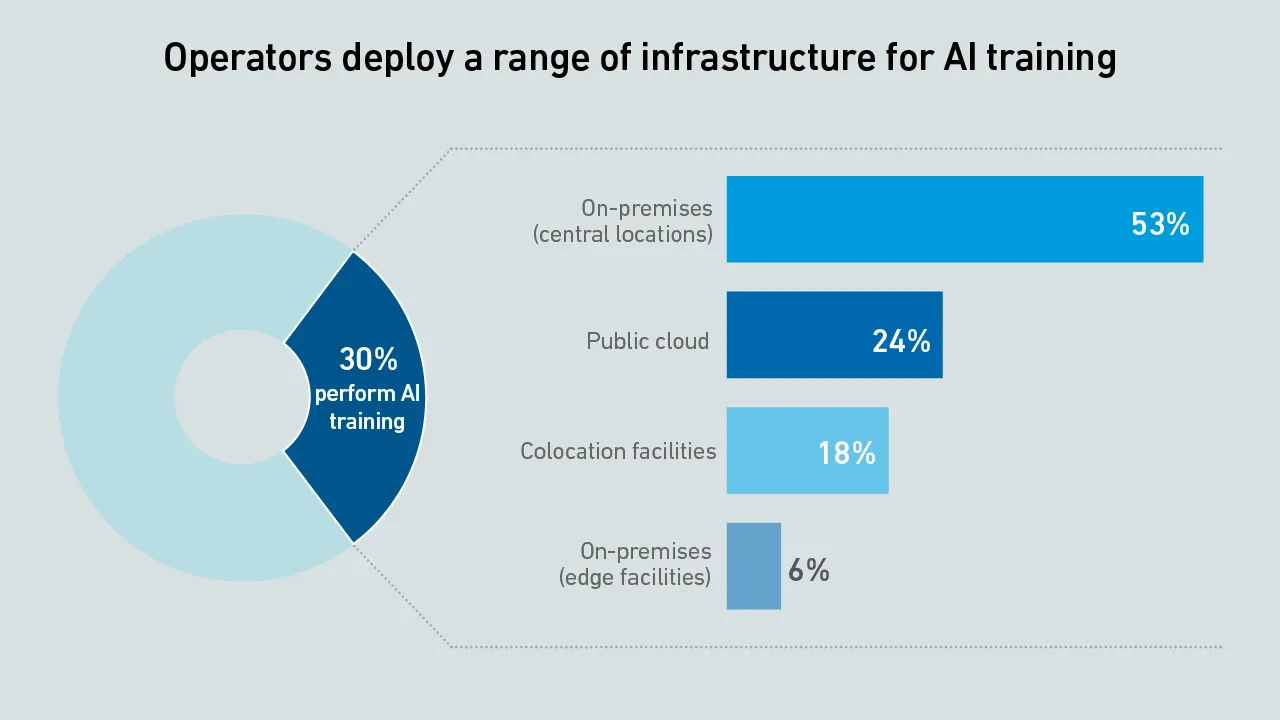

From on-prem AI to high-density IT, this webinar examined survey findings on how operators are preparing for what's next.

AWS has recently cut prices on a range of GPU-backed instances. These price reductions make it harder to justify an investment in dedicated AI infrastructure.

Several operators originally established to mine cryptocurrencies are now building hyperscale data centers for AI. How did this change happen?

The 15th edition of the Uptime Institute Global Data Center Survey highlights the experiences and strategies of data center owners and operators in the areas of resiliency, sustainability, efficiency, staffing, cloud and AI.

The data center industry is on the cusp of the hyperscale AI supercomputing era, where systems will be more powerful and denser than the cutting-edge exascale systems of today. But will this transformation really materialize?

AI training can strain power distribution systems and shorten hardware life - especially in data centers not built for dynamic workloads. Many operators may be underestimating these risks during design and capacity planning.

Training large transformer models is different from all other workloads - data center operators need to reconsider their approach to both capacity planning and safety margins across their infrastructure.

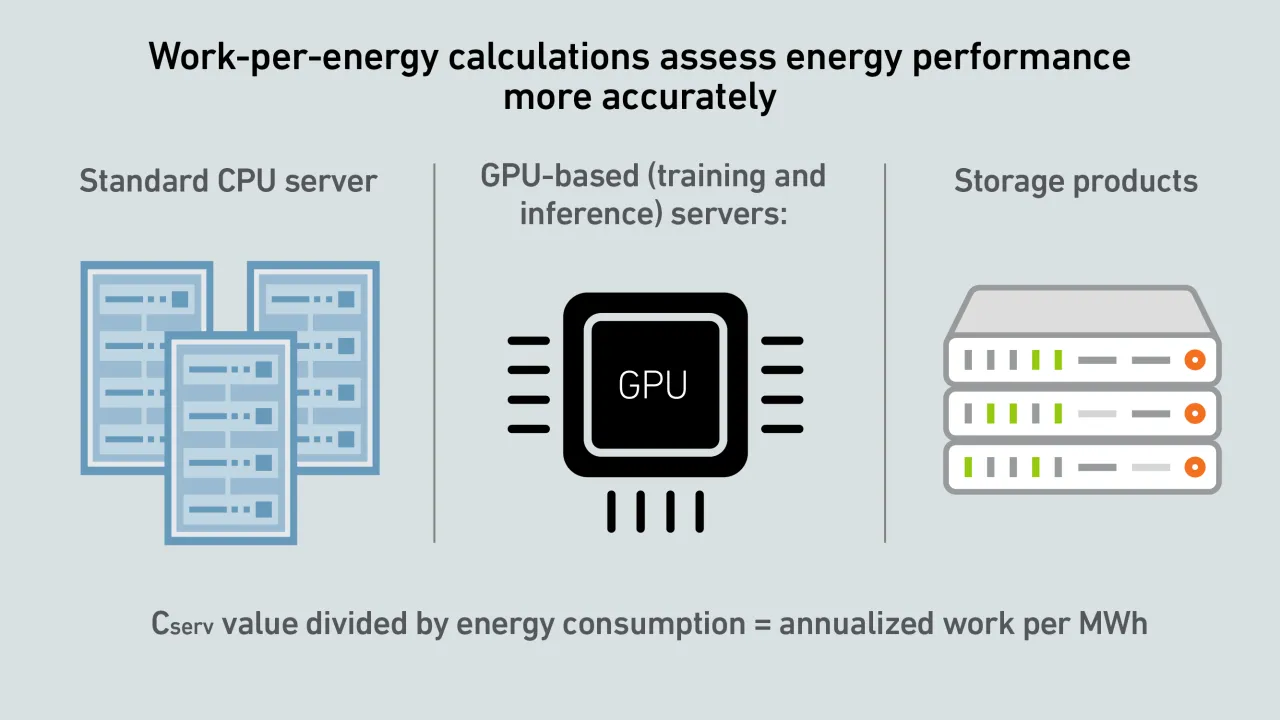

A report by Uptime's Sustainability and Energy Research Director Jay Dietrich merits close attention; it outlines a way to calculate data center IT work relative to energy consumption. The work is supported by Uptime Institute and The Green Grid.

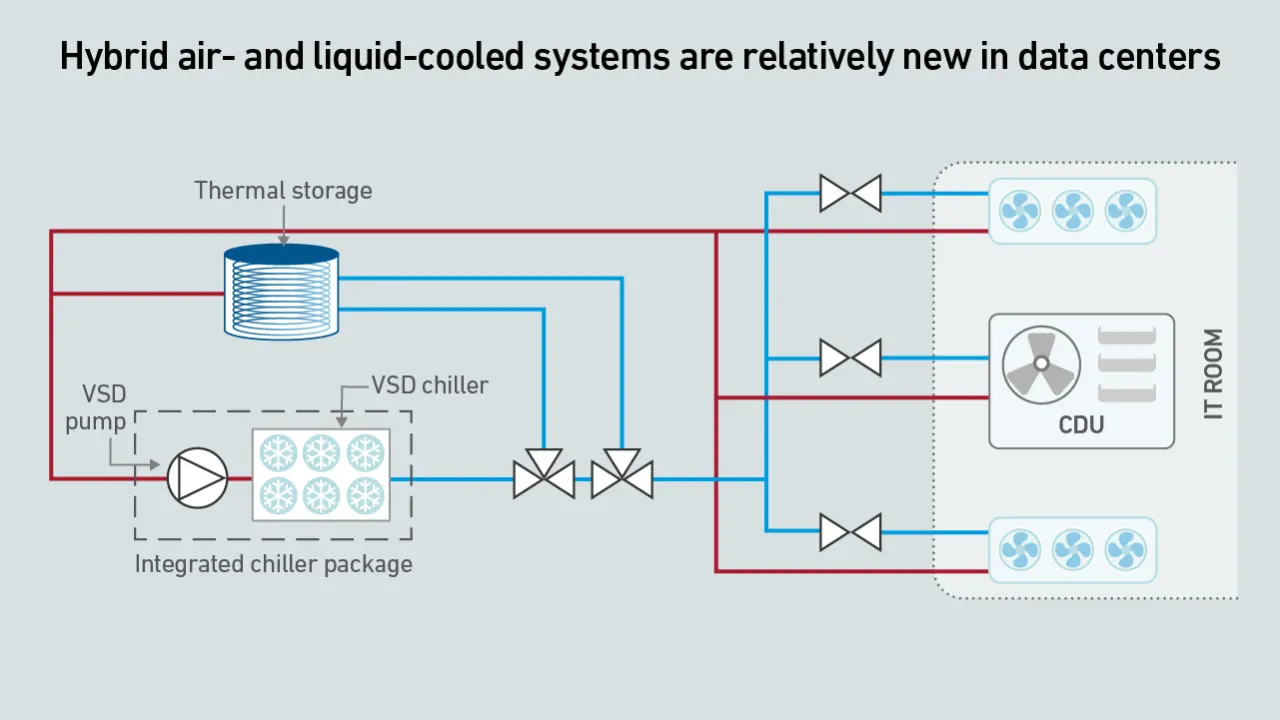

To meet the demands of unprecedented rack power densities, driven by AI workloads, data center cooling systems need to evolve and accommodate a growing mix of air and liquid cooling technologies.

Today, GPU designers pursue outright performance over power efficiency. This is a challenge for inference workloads that prize efficient token generation. GPU power management features can help, but require more attention.

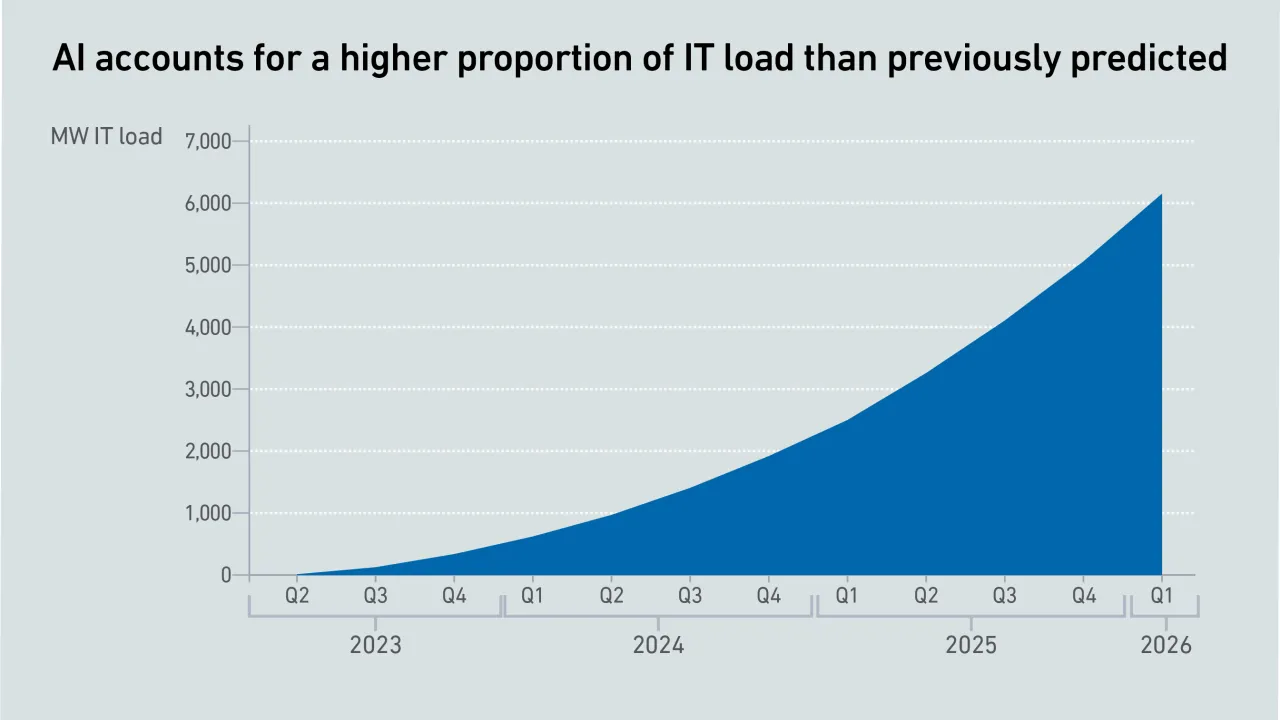

The past year warrants a revision of generative AI power estimates, as GPU shipments have skyrocketed, despite some offsetting factors. However, uncertainty remains high, with no clarity on the viability of these spending levels.

As AI workloads surge, managing cloud costs is becoming more vital than ever, requiring organizations to balance scalability with cost control. This is crucial to prevent runaway spend and ensure AI projects remain viable and profitable.

The US government's AI compute diffusion rules, introduced in January 2025, will be rescinded, with new rules to follow. The government also warns that any dealings linked to advanced Chinese chips will require US export authorization. Operators still face tough demands.

In the US, the politicization of data center development is underway, driven by its impact on power prices. As state governments seek ways to protect consumers, operators will need to engage in the policy debate.

Dr. Owen Rogers

Max Smolaks

Daniel Bizo

Douglas Donnellan

Andy Lawrence

Peter Judge

Jacqueline Davis

Rose Weinschenk

Dr. Tomas Rahkonen
