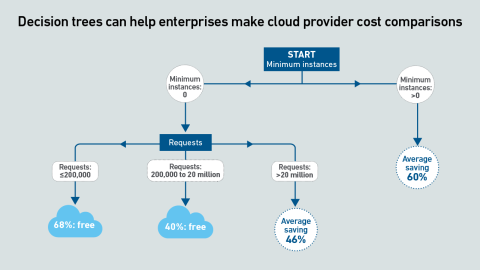

Serverless container services enable rapid, per-second scalability, which is ideal for AI inference. However, inconsistent and opaque pricing metrics hinder comparisons. This pricing tool compares the cost of services across providers.

Serverless container services enable rapid scalability, which is ideal for AI inference. However, inconsistent and opaque pricing metrics hinder comparisons. This report uses machine learning to derive clear guidance by means of decision trees.

Current geopolitical tensions are eroding some European organizations’ confidence in the security of hyperscalers; however, moving away from them entirely is not practically feasible.

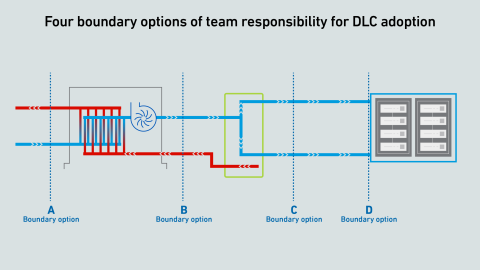

Direct liquid cooling challenges the common “line of demarcation” for responsibilities between facilities and IT teams. Operators lack a consensus on a single replacement model—and this fragmentation may persist for several years.

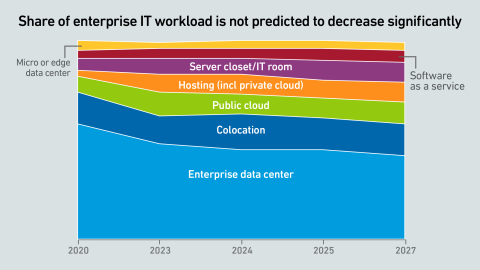

Although the share of processing handled by the corporate or enterprise sector has declined over the years, it has never disappeared. But there are signs that it may reclaim a more central role.

As AI workloads surge, managing cloud costs is becoming more vital than ever, requiring organizations to balance scalability with cost control. This is crucial to prevent runaway spend and ensure AI projects remain viable and profitable.

Tensions between team members of different ranks or departments can inhibit effective communication in a data center, putting uptime at risk. This can be avoided by adopting proven communication protocols from other mission-critical industries.

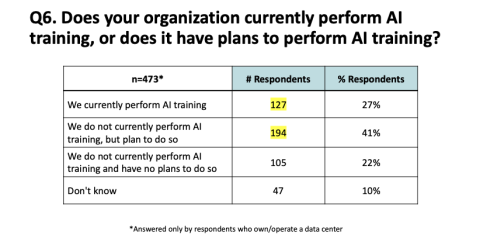

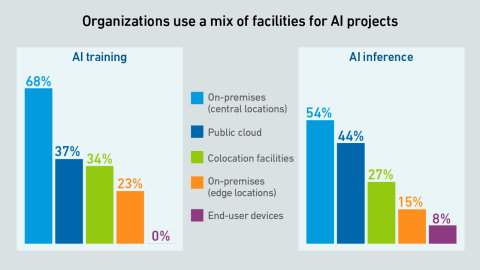

Organizations currently performing AI training and inference leverage resources from a mix of facilities. However, most prioritize on-premises data centers, driven by data sovereignty needs and access to hardware.

In today’s quickly evolving market, staying up to date on industry developments can be challenging. Join us for a deep dive on how transforming your operations can make your organization more resilient to evolving technologies, staffing, and…

How far can we go with air? Uptime experts discuss and answer questions on cooling strategies and debate the challenges and trade-offs with efficiency and costs. Please watch this latest entry in the Uptime Intelligence Client Webinar series. The…

Chinese large language model DeepSeek has shown that state-of-the-art generative AI capability may be possible at a fraction of the cost previously thought.

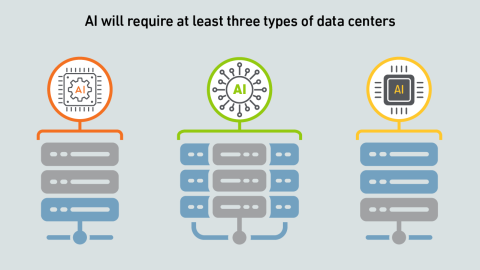

AI is not a uniform workload — the infrastructure requirements for a particular model depend on a multitude of factors. Systems and silicon designers envision at least three approaches to developing and delivering AI.

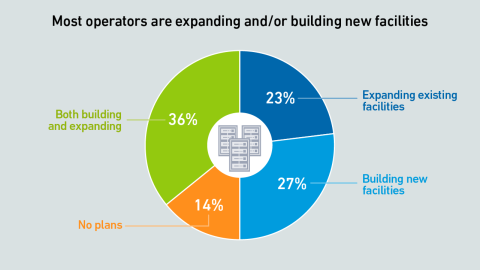

This report highlights some of the findings from the Uptime Institute Capacity Trends and Cloud Survey 2024. Findings offer insight into what is driving capacity expansion.

Results from Uptime Institute's 2025 AI Infrastructure Survey (n=1,062) focus on the data center infrastructure currently used, or planned, to host AI training and AI inference, as well as the industry's outlook on future AI usage. The…

Agentic AI offers enormous potential to the data center industry over the next decade. But are the benefits worth the inevitable risks?

Owen Rogers

Jacqueline Davis

Andy Lawrence

Rose Weinschenk

Douglas Donnellan

Madeleine Kudritzki

Ryan Orr

Daniel Bizo

Tomas Rahkonen

Muhammad Naveed Saeed

John O'Brien

Max Smolaks

Paul Carton

Anthony Sbarra

Laurie Williams
