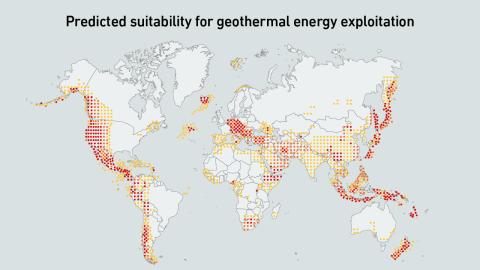

Underground hot rocks are emerging as a source of firm, low-carbon power for data centers, with new techniques expanding viable locations. Compared with nuclear, geothermal may be better positioned to support planned data center growth.

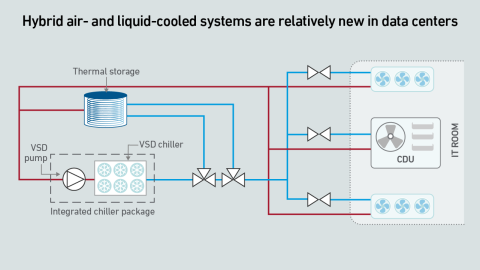

To meet the demands of unprecedented rack power densities, driven by AI workloads, data center cooling systems need to evolve and accommodate a growing mix of air and liquid cooling technologies.

As AI workloads surge, managing cloud costs is becoming more vital than ever, requiring organizations to balance scalability with cost control. This is crucial to prevent runaway spend and ensure AI projects remain viable and profitable.

Digital twins are increasingly valued in complex data center applications, such as designing and managing facilities for AI infrastructure. Digitally testing and simulating scenarios can reduce risk and cost, but many challenges remain.

While AI infrastructure build-out may focus on performance today, over time data center operators will need to address efficiency and sustainability concerns.

AI vendors claim that “reasoning” can improve the accuracy and quality of the responses generated by LLMs, but this comes at a high cost. What does this mean for digital infrastructure?

Data center builders who need power must navigate changing rules and unpredictable demand, and be prepared to trade.

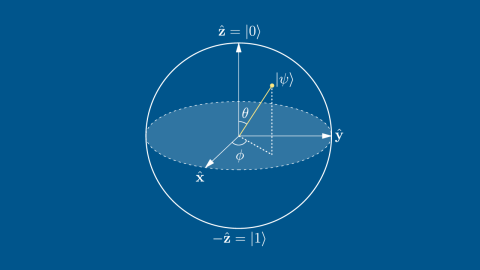

Quantum computing progress is slow; press releases often fail to convey the work required to make practical quantum computers a reality. Data center operators do not need to worry about quantum computing right now.

Chinese large language model DeepSeek has shown that state-of-the-art generative AI capability may be possible at a fraction of the previously assumed cost.

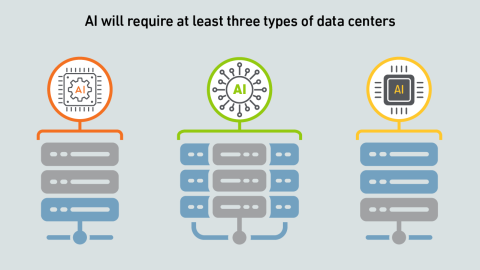

AI is not a uniform workload — the infrastructure requirements for a particular model depend on a multitude of factors. Systems and silicon designers envision at least three approaches to developing and delivering AI.

Small modular reactors (SMRs) promise to usher in an era of dispatchable low-carbon energy. At present, however, their future is a blurry expanse of possibilities rather than a clear path ahead, as key questions of costs, timelines and operations remain.

Rapidly increasing electricity demand requires new generation capacity to power new data centers. What are some of the new, innovative power generation technology and procurement options being developed to meet capacity growth and what are their…

Agentic AI offers enormous potential to the data center industry over the next decade. But are the benefits worth the inevitable risks?

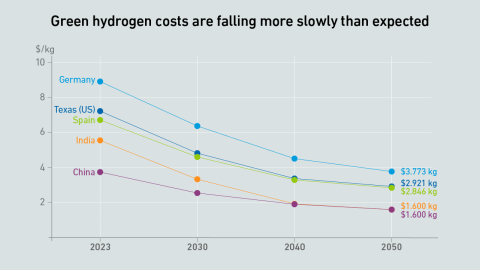

The cost of low-carbon green hydrogen will be prohibitive for primary power for many years. Some operators may adopt high-carbon (polluting) gray hydrogen ahead of transitioning to green hydrogen.

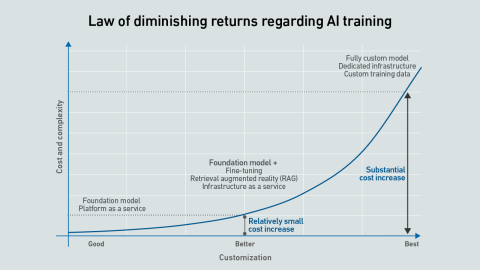

Dedicated AI infrastructure helps ensure data is controlled, compliant and secure, while models remain accurate and differentiated. However, this reassurance comes at a cost that may not be justified compared with cheaper options.

Peter Judge

Tomas Rahkonen

Owen Rogers

John O'Brien

Max Smolaks

Andy Lawrence

Daniel Bizo

Jay Dietrich