DLC was developed to handle high heat loads from densified IT. True mainstream DLC adoption remains elusive; it still awaits design refinements to address outstanding operational issues for mission-critical applications.



The use of on-site natural gas power generation for big data centers will strain operators’ ability to meet net-zero carbon goals. To counter this, operators will increasingly explore, promote and in some cases deploy carbon capture and storage.

European national grid operators are advised to adopt proposed grid code requirements to protect their infrastructure from risks, such as data center activity, even though Commission action on the issue has stalled.

Cloud sovereignty is often treated as a binary choice, but in reality it is a spectrum shaped by law, operations, technologies and supply chains. This framework explains the differences between sovereign public cloud options.

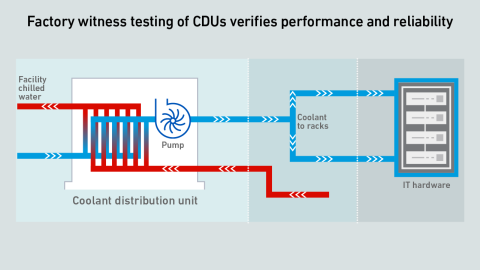

DLC introduces challenges at all levels of data center commissioning. Some end users accept CDUs without factory witness testing, a significant departure from the conventional commissioning script.

Cybercriminals increasingly target supply chains as entry points for coordinated attacks; however, many vulnerabilities have been overlooked by operators and persist, despite their growing risk and severity.

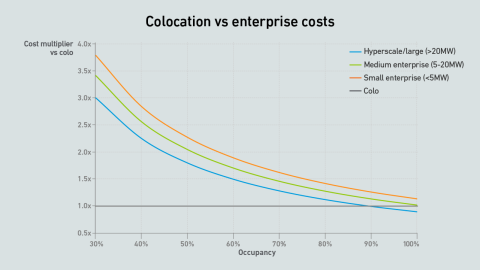

Simple arithmetic shows that newly constructed, large-scale private data centers with high occupancy rates can sometimes undercut colos on unit costs, but in most cases colos remain significantly cheaper.

Nvidia’s DSX proposal outlines a software-led model where digital twins, modular design and automation could shape how future gigawatt-scale AI facilities operate, even though the approach remains largely conceptual.

Several influential and well-resourced companies claim that the next wave of data centers will be deployed in space. Should this be taken seriously?

Nvidia’s DGX-Ready certification for colocation facilities has been around for nearly six years, yet what the program actually entails remains obscure.

Uptime Intelligence’s predictions for 2025 are revisited and reassessed with the benefit of hindsight.

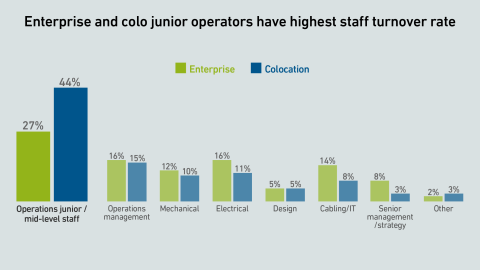

Colocation and enterprise companies have endured ongoing staffing issues, and accelerated growth in 2026 will strain hiring pools further. Strategies to address worker vacancies vary, but few have been successful.

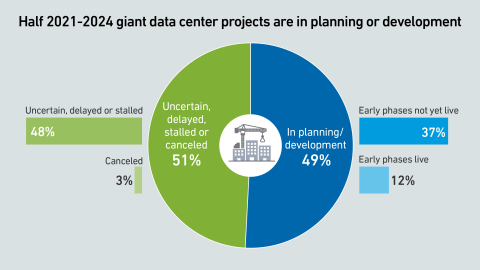

Giant data centers are being planned and built across the world to support AI, with successful projects forming the backbone of a huge expansion in capacity. However, many projects remain uncertain, pointing to risks and persistent headwinds.

Japan is expected to issue data center regulations in 2026: data centers that do not meet a 1.4 PUE limit will face penalties. However, enterprise data centers appear to have evaded the stipulations.

A last-minute revision to the European Commission’s plans to simplify regulations has brought some companies back into scope for CSRD reporting.

Jacqueline Davis

Jay Dietrich

Peter Judge

Dr. Owen Rogers

John O'Brien

Dr. Rand Talib

Andy Lawrence

Max Smolaks

Rose Weinschenk
