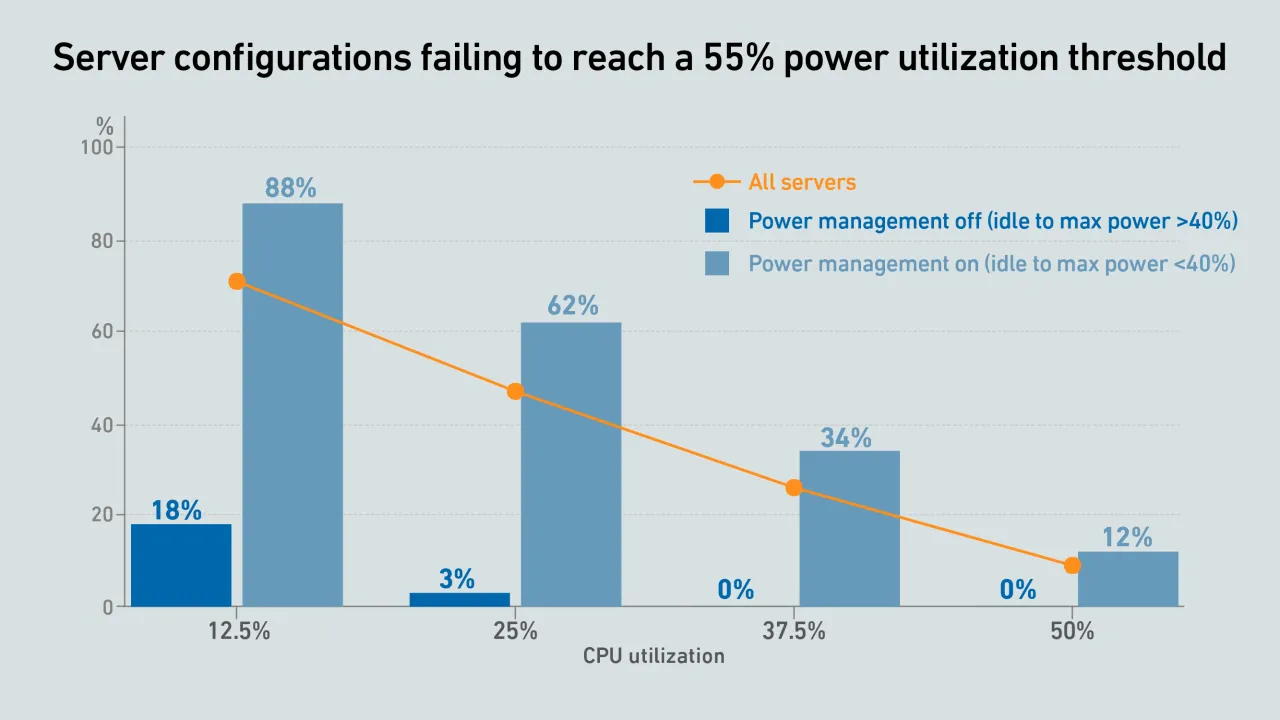

Uptime Intelligence analysis shows that minimum thresholds for IT power utilization misrepresent server work capacity utilization. These thresholds can unintentionally incentivize operators to disable server energy efficiency features.

Concerns over rising electricity costs and environmental impact are driving local opposition to new data center projects in the US, prompting a growing number of cancellations.

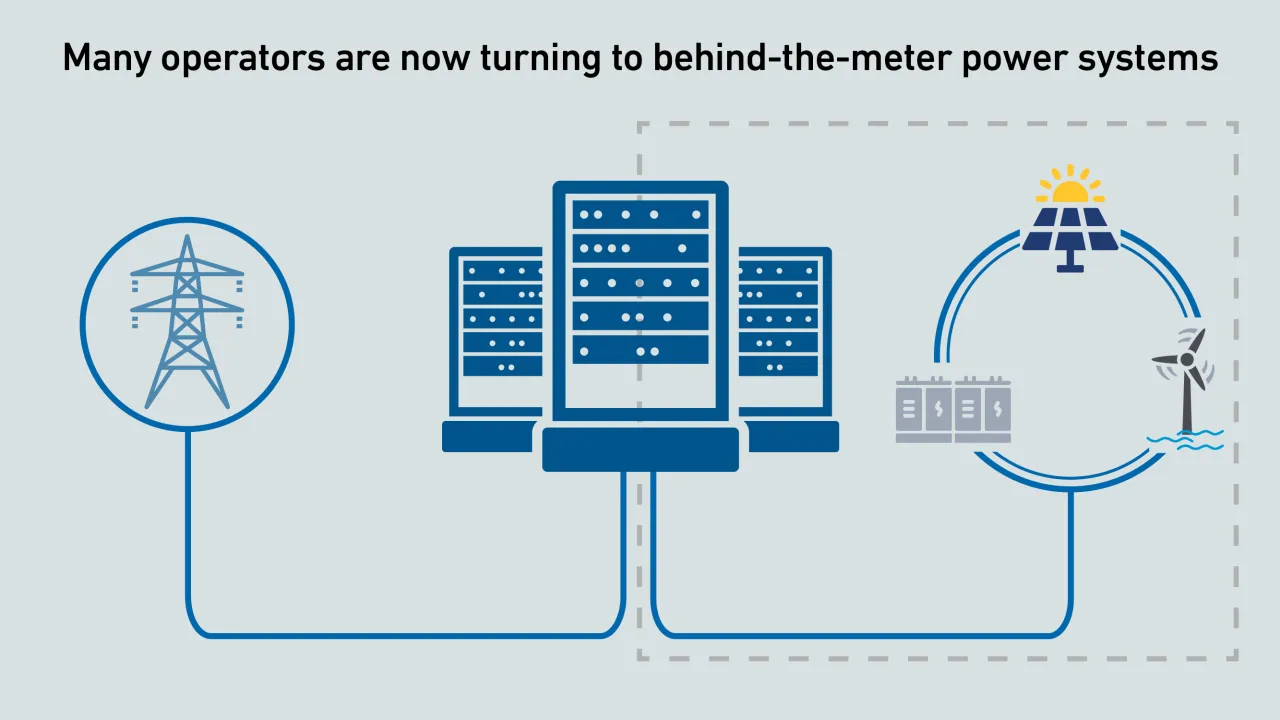

Operators are proposing behind-the-meter power systems to accelerate the buildout of new AI data center infrastructure. Executing this strategy requires regulatory changes in many jurisdictions and new data center design approaches.

While DCIM software has certain characteristics of a digital twin, persistent data quality and system interoperability issues mean a true digital twin for data center operations is still some way off.

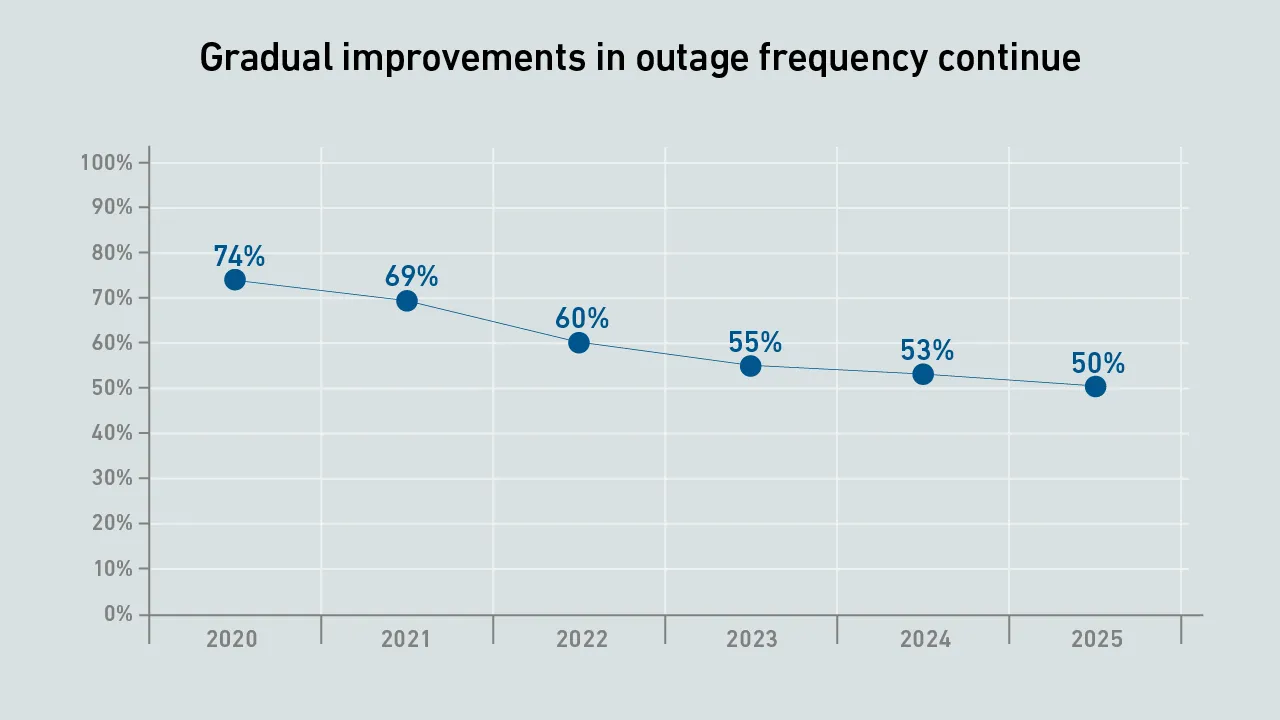

There is a growing list of reasons why designers and operators are re-examining redundancy and resiliency, but for most the conclusion is inescapable: current levels of resiliency will need to be maintained.

French data center operators must meet strict PUE and WUE targets from early 2027 to maintain their electricity tax benefits.

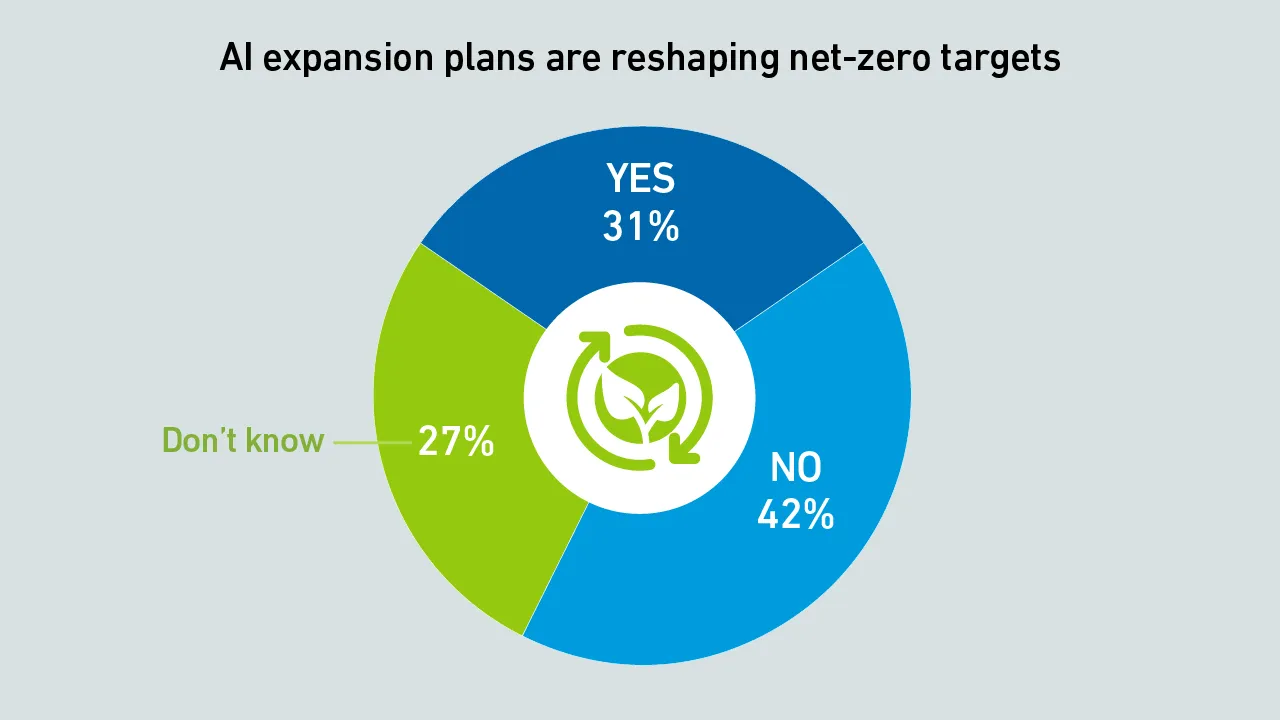

Surging demand for AI data centers is driving a shift to on-site natural gas power, even though operators admit this will delay the achievement of net-zero goals.

As AI adoption spreads, most data centers will not host large training clusters — but many will need to operate specialized systems to run inferencing close to applications.

Elon Musk's merger of his SpaceX aerospace company with his AI firm xAI has relit the thrusters under the concept of building big data centers in space. However, the technical difficulties involved may ultimately thwart his ambitions.

Ireland has issued new conditions for large grid loads and proposed new codes for data center connections; similar measures are expected to follow in other locations.

The shortage of DRAM and NAND chips caused by the demands of AI data centers is likely to last into 2027, making every server more expensive.

Microsoft and other major campus operators are increasing transparency on new projects and committing to absorb their full development costs, but further commitments are needed to fully address public concerns.

Nvidia CEO Jensen Huang's comment that liquid-cooled AI racks will need no chillers created some turbulence — however, the concept of a chiller-free data center is an old one and is unlikely to suit most operators.

AI in data center operations is shifting from experimentation to early production use. Adoption remains cautious and bounded, focused on practical automation that supports operators rather than replacing them.

In 2026, enterprises will be more realistic about their use of generative AI, prioritizing simple use cases that deliver clear, timely value over more innovative projects where returns, and successful outcomes, are less assured.

Dr. Tomas Rahkonen

Max Smolaks

Jay Dietrich

John O'Brien

Andy Lawrence

Peter Judge

Dr. Owen Rogers

Daniel Bizo

Dr. Rand Talib