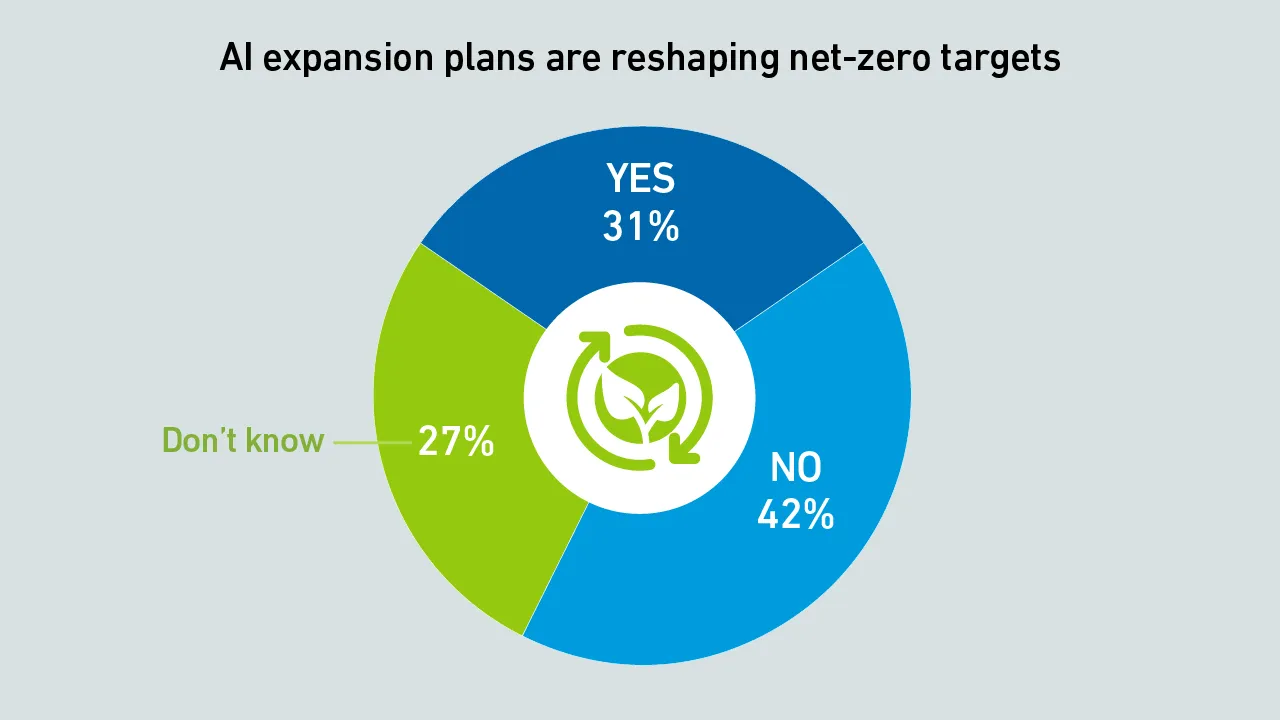

Surging demand for AI data centers is driving a shift to on-site natural gas power, even though operators admit this will delay the achievement of net-zero goals.

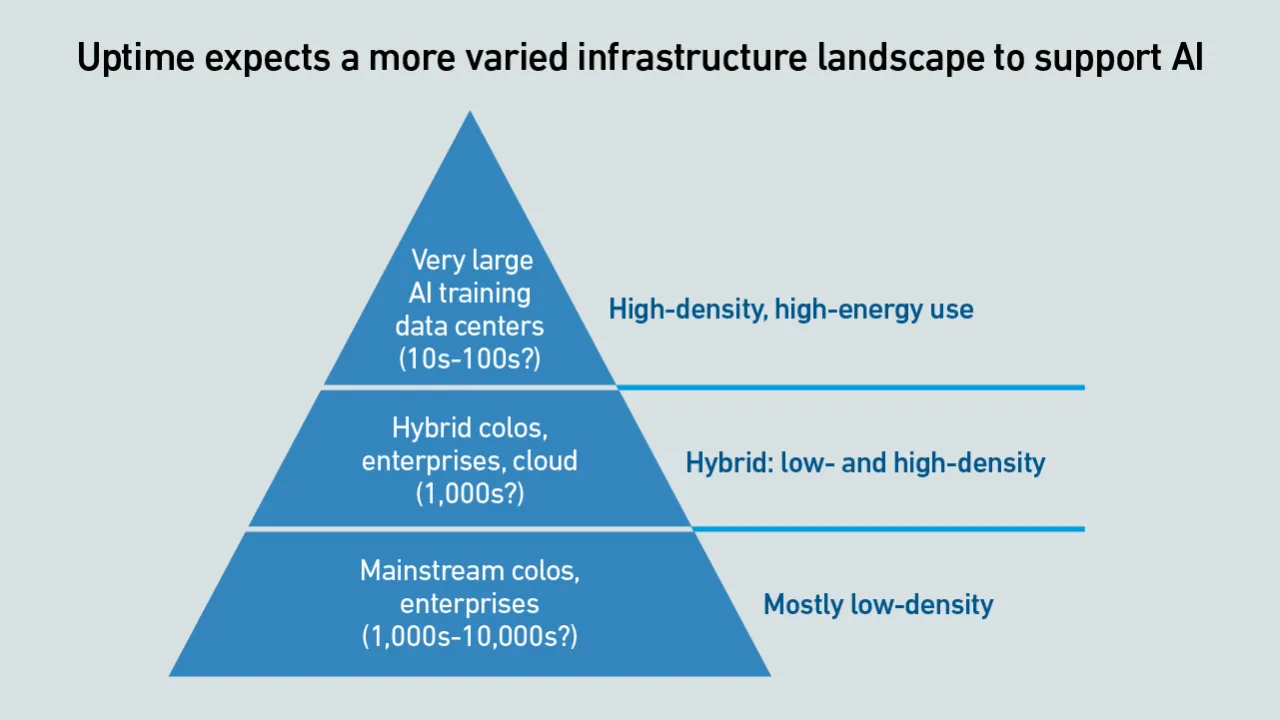

As AI adoption spreads, most data centers will not host large training clusters — but many will need to operate specialized systems to run inferencing close to applications.

Elon Musk's merger of his SpaceX aerospace company with his AI firm xAI has relit the thrusters under the concept of building big data centers in space. However, the technical difficulties involved may ultimately thwart his ambitions.

In 2026, enterprises will be more realistic about their use of generative AI, prioritizing simple use cases that deliver clear, timely value over those more innovative projects where returns — and successful outcomes — are less assured.

Investment in large-scale AI has accelerated the development of electrical equipment, which creates opportunities for data center designers and operators to rethink power architectures.

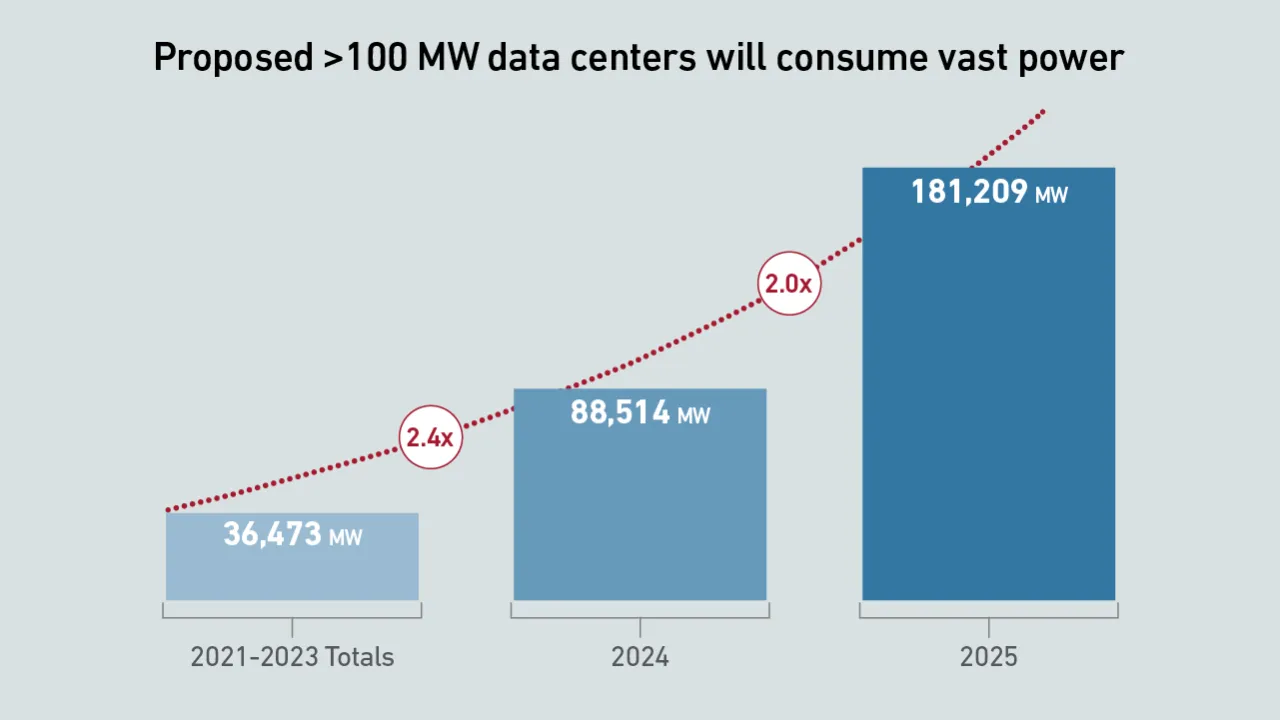

Data from Uptime Intelligence's giant data center analysis indicates that proposed power capacity and investment tied to giant data centers and campuses are at unprecedented levels.

Direct liquid cooling (DLC) was developed to handle high heat loads from densified IT. True mainstream DLC adoption remains elusive; it still awaits design refinements to address outstanding operational issues for mission-critical applications.

Uptime Intelligence looks beyond the more obvious trends of 2026 and examines some of the latest developments and challenges shaping the data center industry.

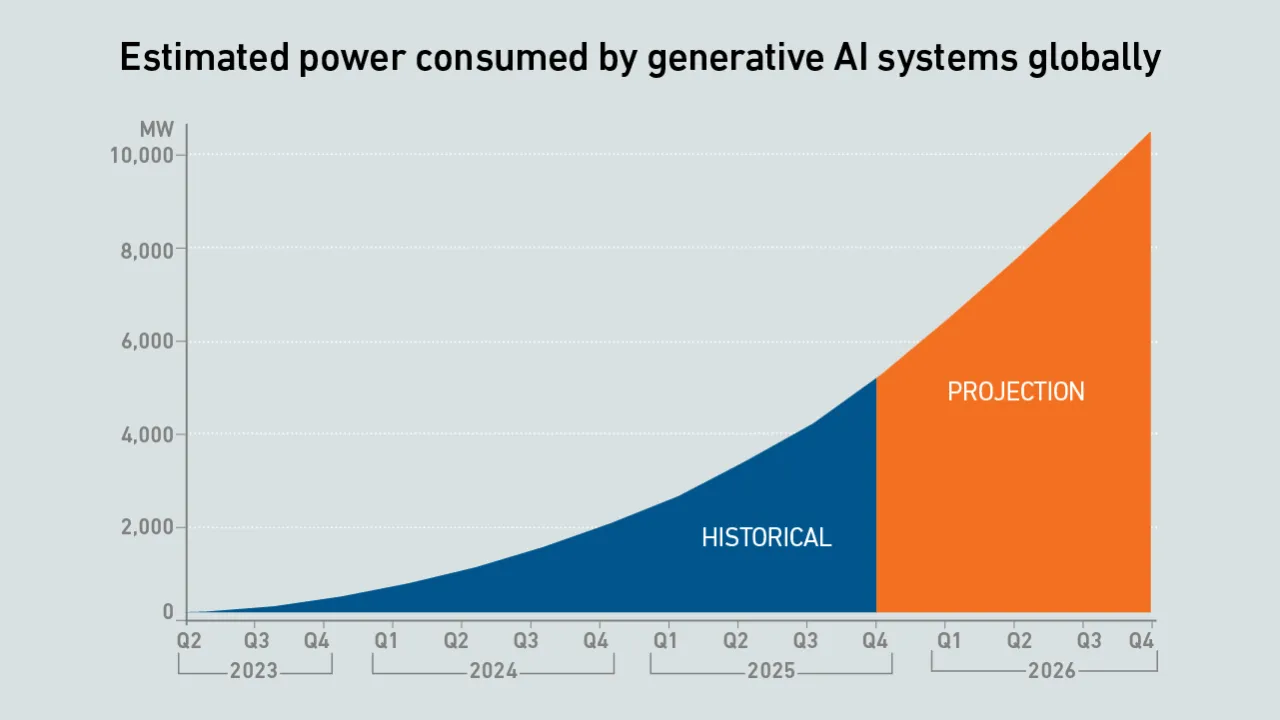

The updated model projects a doubling of power consumption by the end of 2026, with IT loads serving generative AI workloads breaking through 10 GW of capacity.

Financial institutions are embracing public cloud for some mission-critical workloads, and using it as a launchpad for AI development.

Consensus is growing that a major market "correction" is coming: while some infrastructure operators are highly exposed, others may stand to benefit.

As IT organizations embrace AI, data center facilities and colocation providers need to plan to deploy the supporting infrastructure, despite many uncertainties. Most, however, are still moving cautiously.

Research into neuromorphic computing could lead to the creation of smaller, faster and more energy-efficient AI accelerators. This would have a transformative impact on digital infrastructure.

AI is changing how data centers operate: what began with algorithmic fine-tuning of chilled-water plants is now moving into the IT side of operations, closer to the load. But will operators ever trust AI enough to let it run the room?

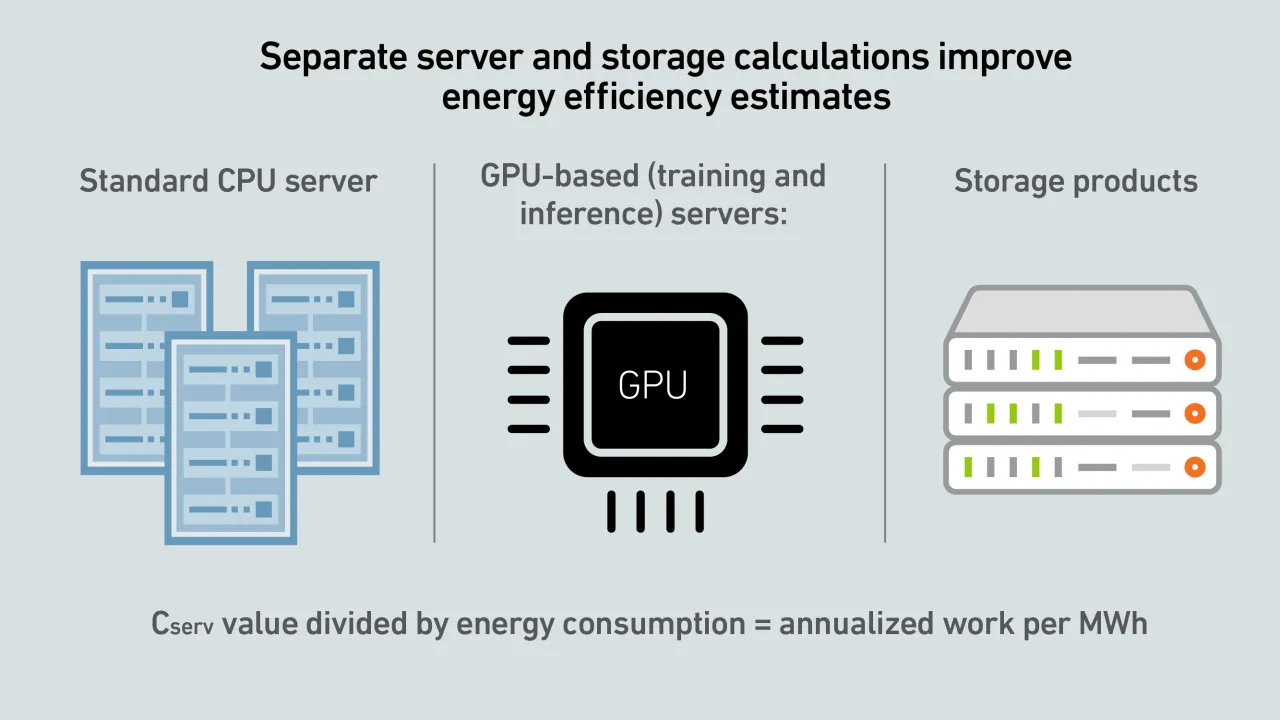

Large-scale AI training is an application of supercomputing. Supercomputing experts at the Yotta 2025 conference agree that operators need to optimize AI training efficiency and develop metrics to account for utilized power.

Peter Judge

Dr. Owen Rogers

Andy Lawrence

Daniel Bizo

John O'Brien

Jacqueline Davis

Jay Dietrich

Douglas Donnellan

Max Smolaks

Dr. Rand Talib
