Microsoft and other major campus operators are increasing transparency on new projects and committing to absorb their full development costs, but further commitments are needed to fully address public concerns.

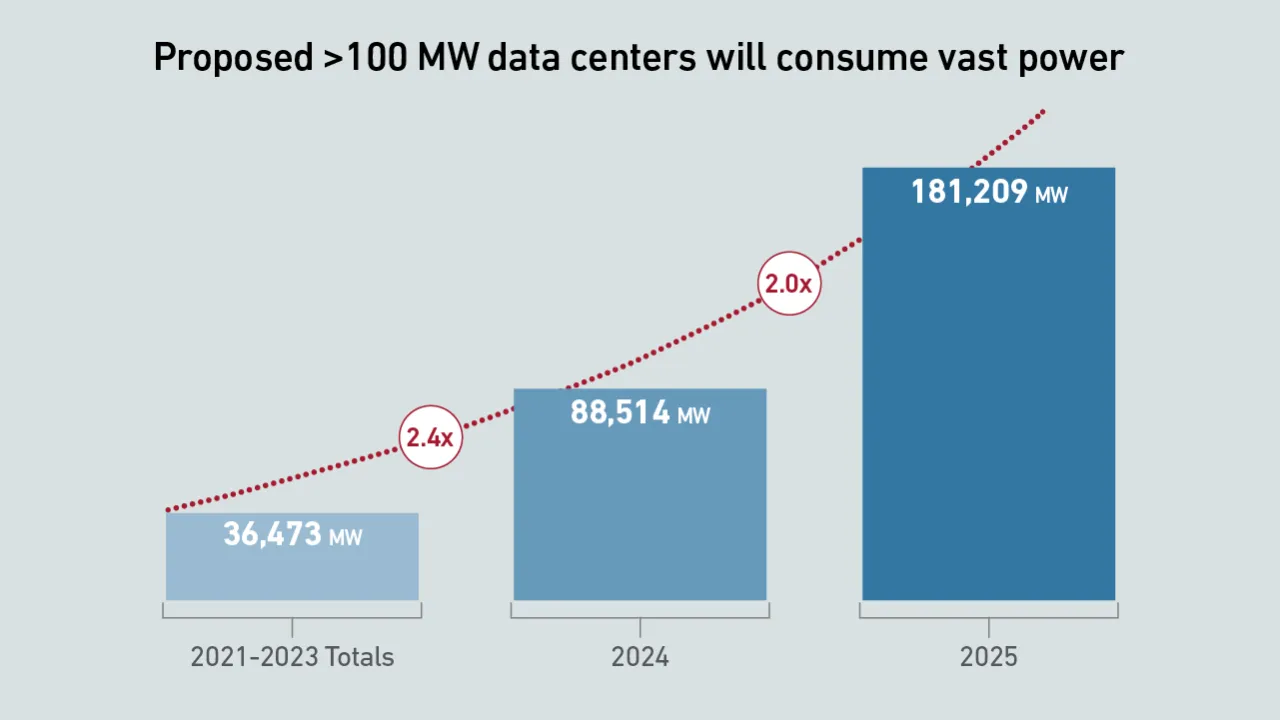

Data from Uptime Intelligence’s giant data center analysis indicates that proposed power capacity and investment tied to giant data centers and campuses are at unprecedented levels.

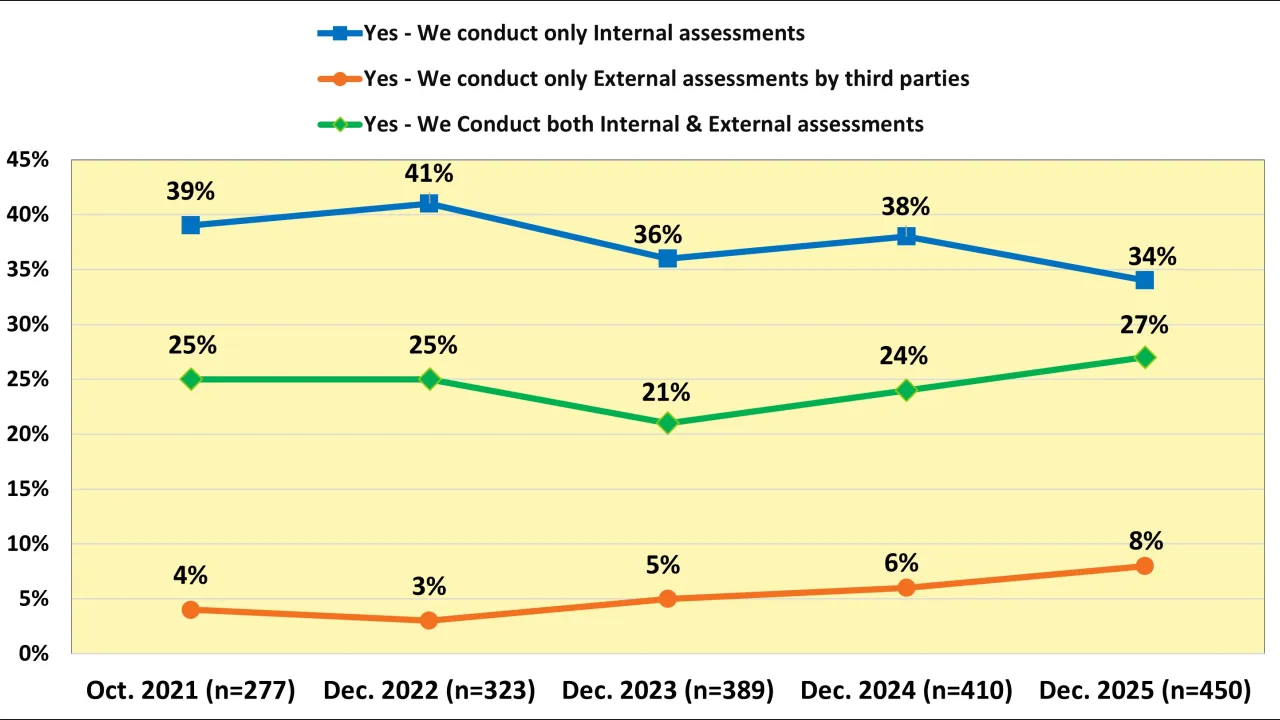

Uptime Institute's Sustainability and Climate Change Survey (n=1,054) benchmarks the data center industry in areas such as sustainability assessments, responses to extreme weather events, and how operators are handling regulatory requirements. The…

Uptime Intelligence looks beyond the more obvious trends of 2026 and examines some of the latest developments and challenges shaping the data center industry.

Nvidia’s DSX proposal outlines a software-led model where digital twins, modular design and automation could shape how future gigawatt-scale AI facilities operate, even though the approach remains largely conceptual.

The Uptime Intelligence research agenda lists published and planned research reports for 2026 and focuses on Uptime Intelligence's primary coverage areas: 1) power generation, distribution, energy storage; 2) data center management…

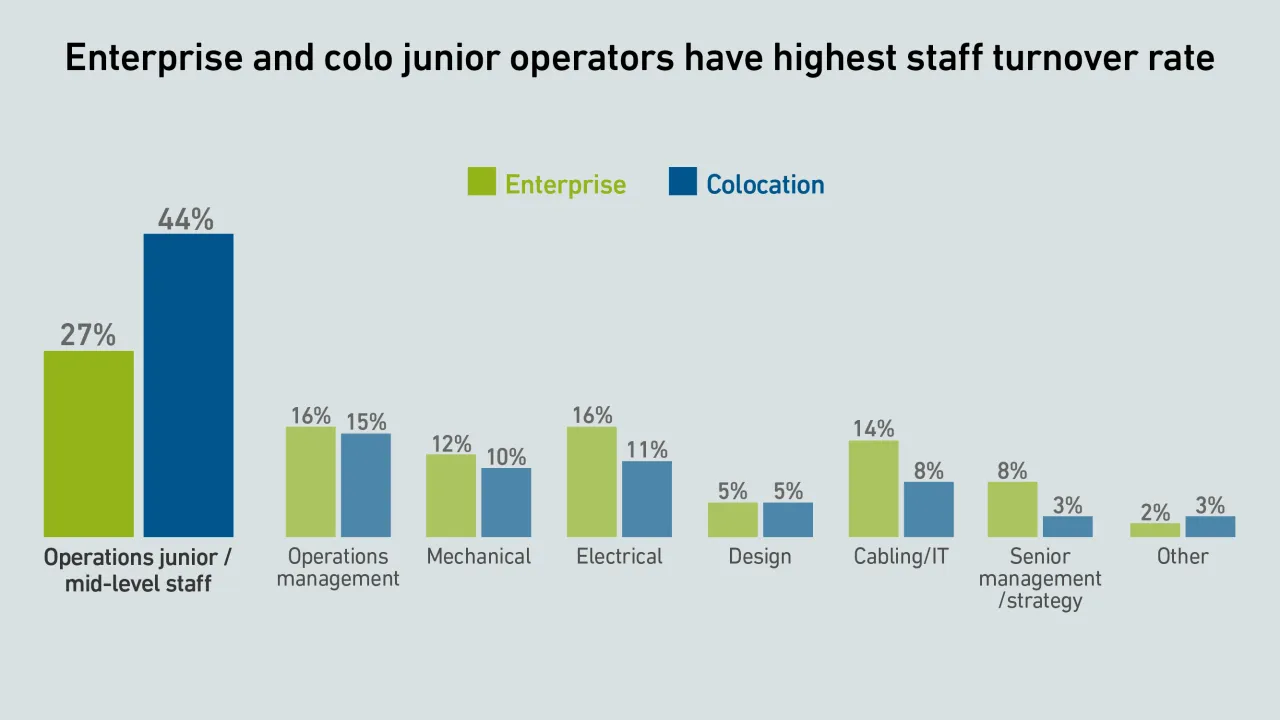

Colocation and enterprise companies have endured ongoing staffing issues, and accelerated growth in 2026 will strain hiring pools further. Strategies to address worker vacancies vary, but few have been successful.

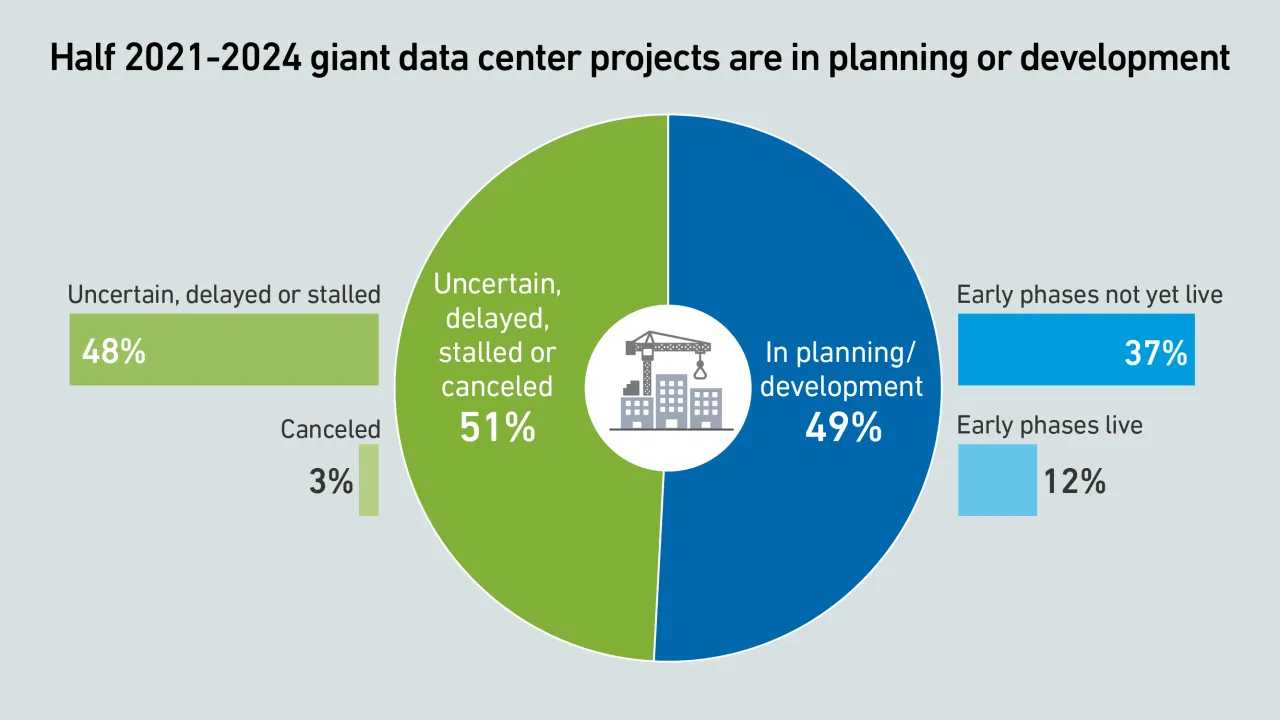

Giant data centers are being planned and built across the world to support AI, with successful projects forming the backbone of a huge expansion in capacity. But many projects remain uncertain, indicating risks and persistent headwinds.

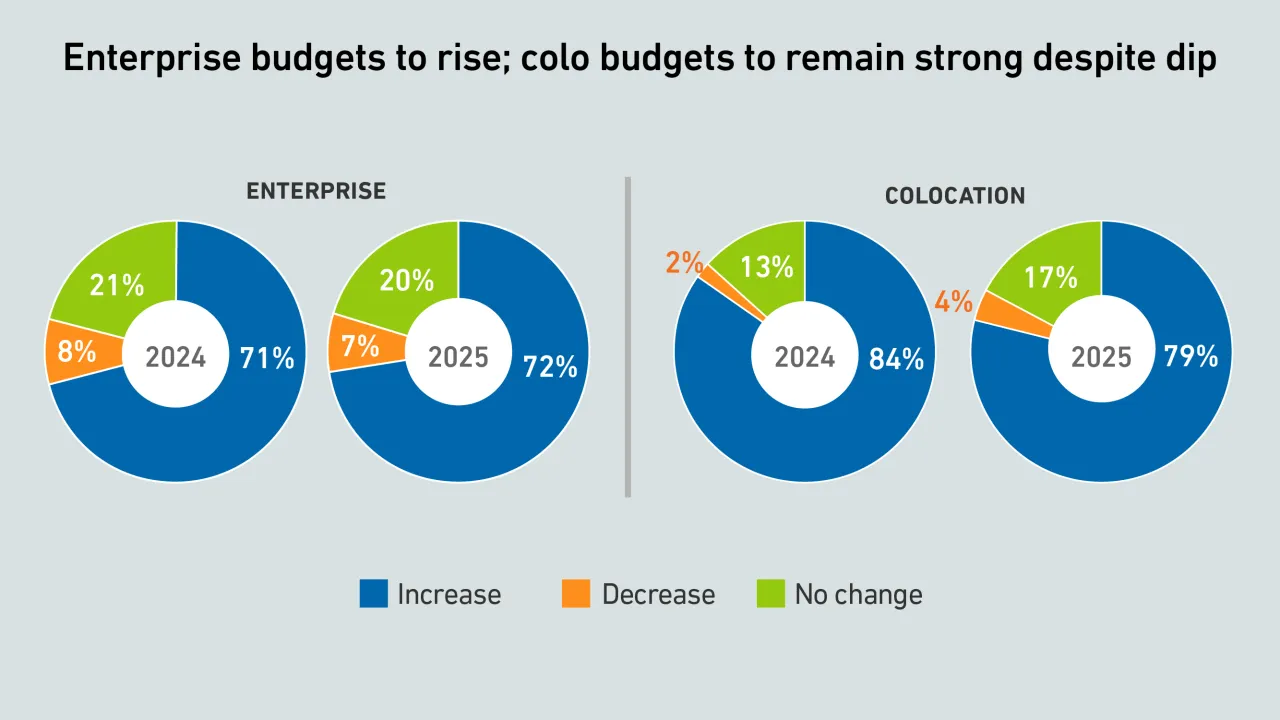

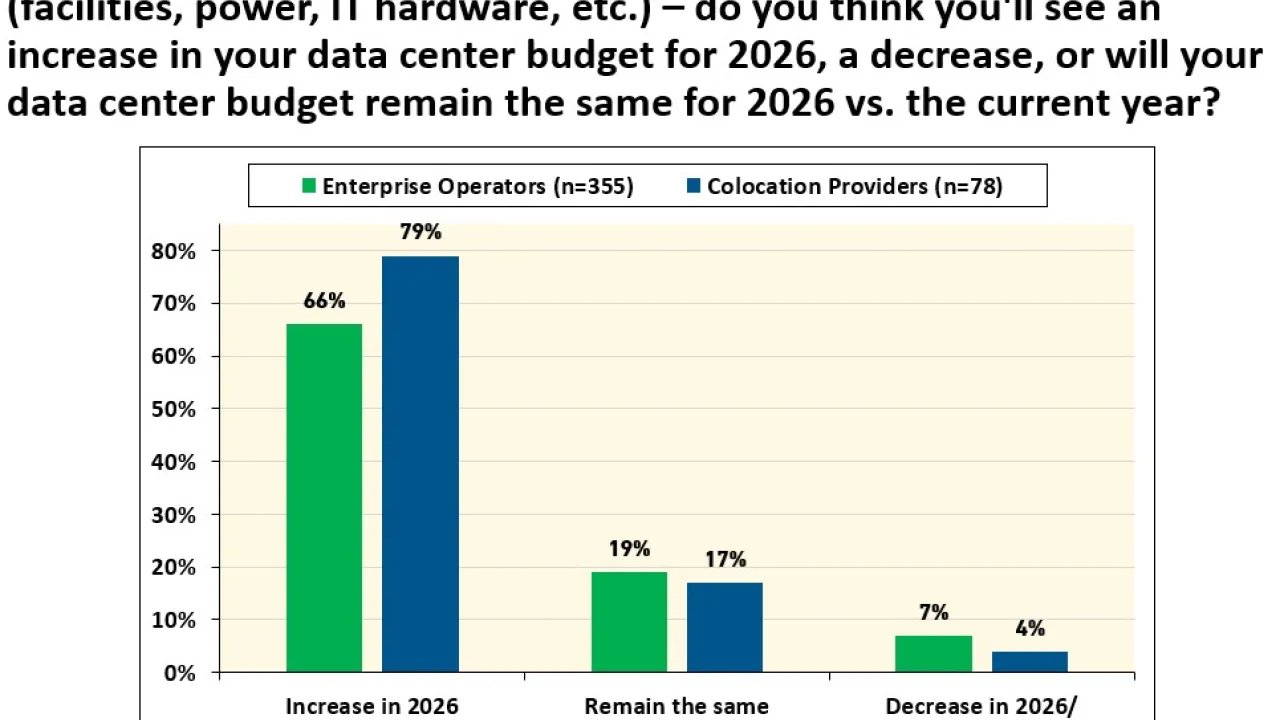

Enterprise and colocation operators continue to invest in growth heading into 2026. However, survey results suggest that strategies for balancing IT, capacity and workforce spending will diverge in the year ahead.

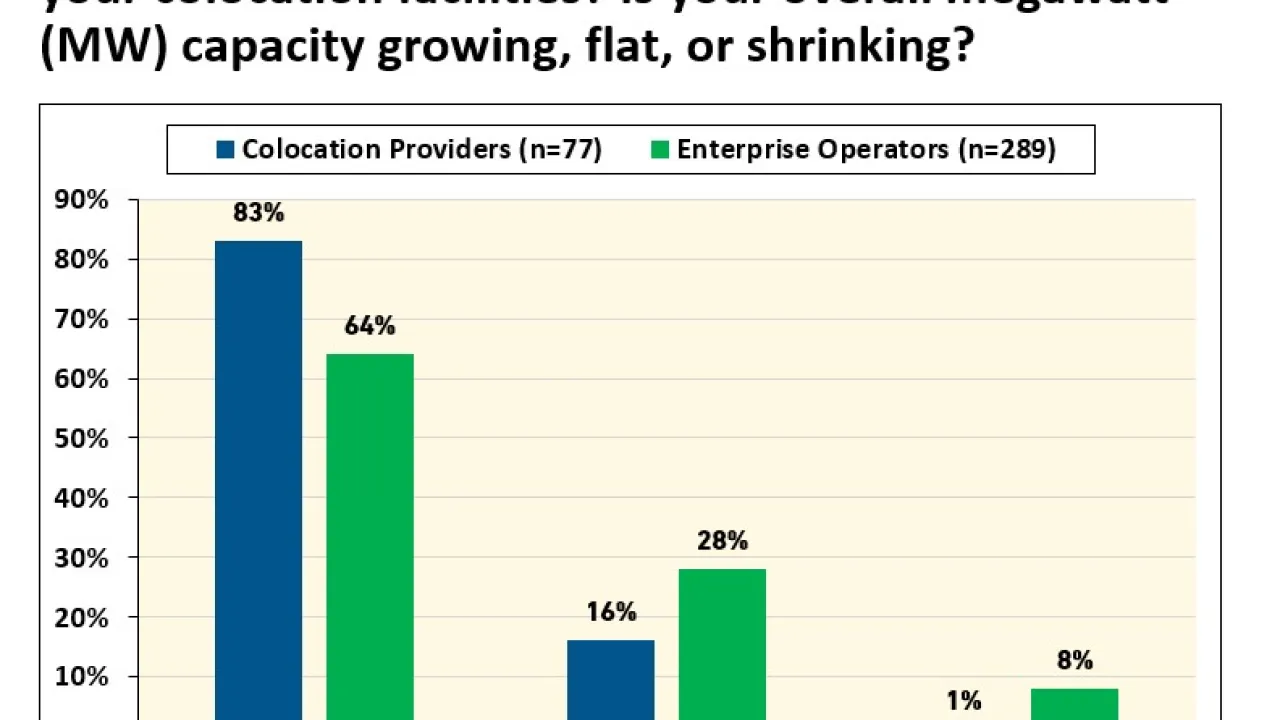

Uptime Institute's 2025 Service Providers and Capacity Survey (n=872) benchmarks the industry in the areas of public cloud, capacity in owned data centers, and capacity in colocation facilities. The attached data files below provide full results of…

Not all AI is the same, yet broad marketing claims often blur the line between automation and real intelligence. Understanding which AI types truly pose risks is essential to reducing operator skepticism and fears of hallucinations.

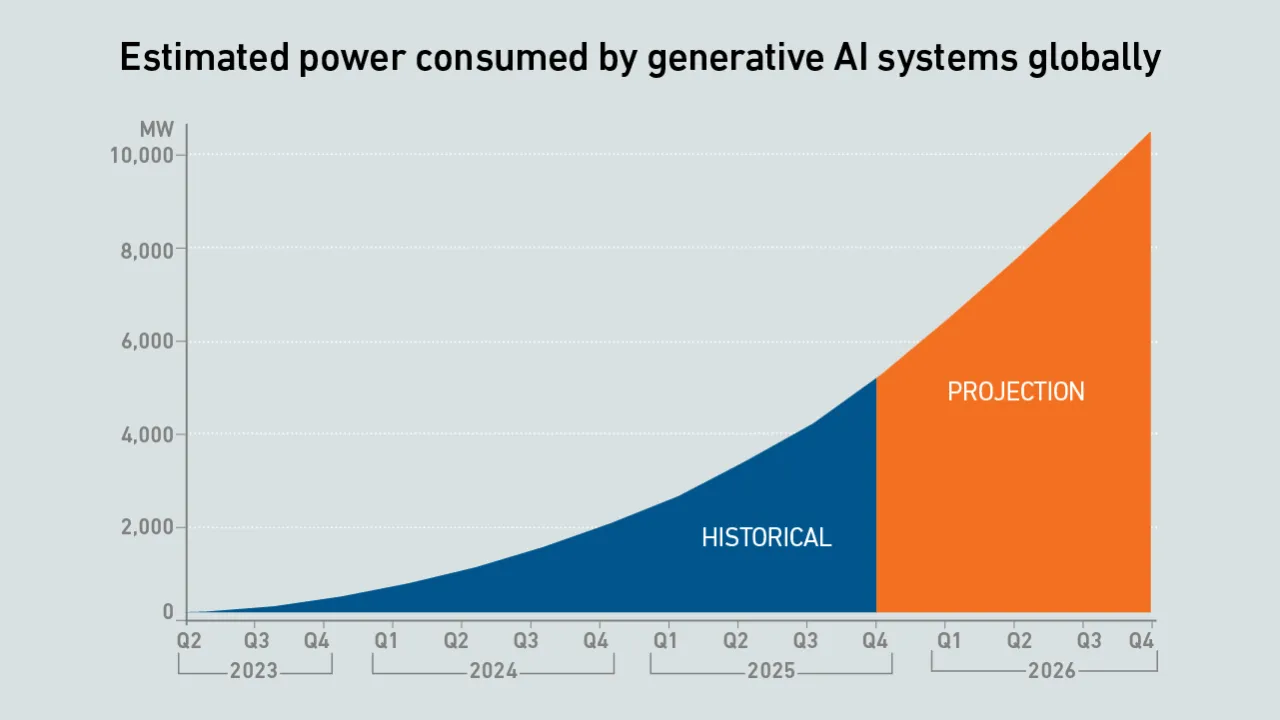

The updated model projects a doubling of power consumption by the end of 2026, with IT loads serving generative AI workloads breaking through 10 GW of capacity.

The European Commission published the final assessment of the data center label and minimum performance standards (MPS) in October. The final delegated act could require posting a label in 2026 or 2027, with MPS values likely to be 2 years away.

AI is expected to be useful in data center operations. For this to happen, software vendors need to deliver new products and use cases, and these are starting to appear more often.

Uptime Institute's latest Data Center Spending Survey (n=850) looks ahead to data center spending and budgets for 2026, as well as the current supply chain market in the industry. The attached data files below provide full results of the survey,…

Jay Dietrich

John O'Brien

Paul Carton

Anthony Sbarra

Laurie Williams

Daniel Bizo

Douglas Donnellan

Andy Lawrence

Max Smolaks

Dr. Rand Talib

Rose Weinschenk
