The shortage of DRAM and NAND chips, driven by demand from AI data centers, is likely to last into 2027, making every server more expensive.


Uptime Intelligence’s predictions for 2025 are revisited and reassessed with the benefit of hindsight.

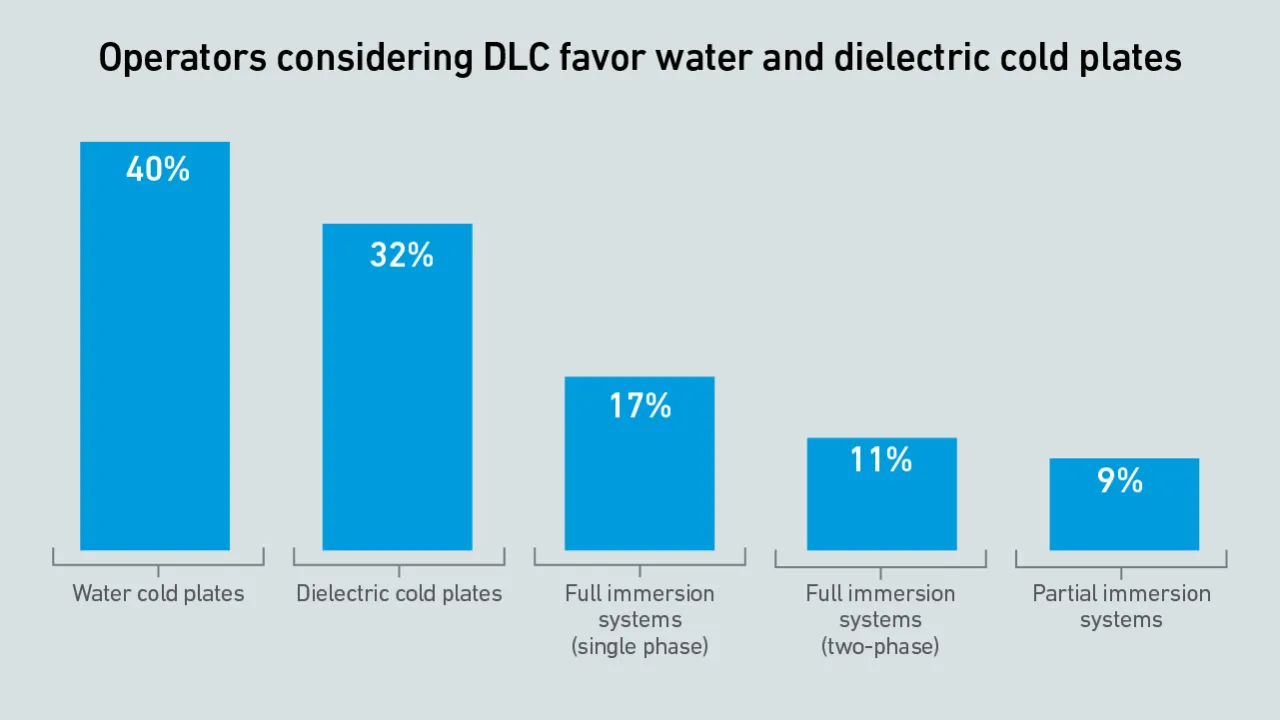

Water cold plates still lead direct liquid cooling (DLC) adoption, but more enterprise operators are considering dielectric cold plates than last year. The next DLC adopters may be open to multiple technologies, while remaining cautious about leak risks.

Results from Uptime Institute's 2025 Security Survey (n=982), now in its third year, explore major cybersecurity issues facing data centers, as well as the IT and OT systems used to operate critical infrastructure. The attached data files below provide…

Direct liquid cooling adoption remains slow, but rising rack densities and the cost of maintaining air cooling systems may drive change. Barriers to integration include a lack of industry standards and concerns about potential system failures.

The data center industry is on the cusp of the hyperscale AI supercomputing era, where systems will be more powerful and denser than the cutting-edge exascale systems of today. But will this transformation really materialize?

Training large transformer models is different from all other workloads - data center operators need to reconsider their approach to both capacity planning and safety margins across their infrastructure.

The US government's AI compute diffusion rules, introduced in January 2025, will be rescinded, with new rules to follow. The government also warns that any dealings linked to advanced Chinese chips will require US export authorization. Operators still face tough demands.

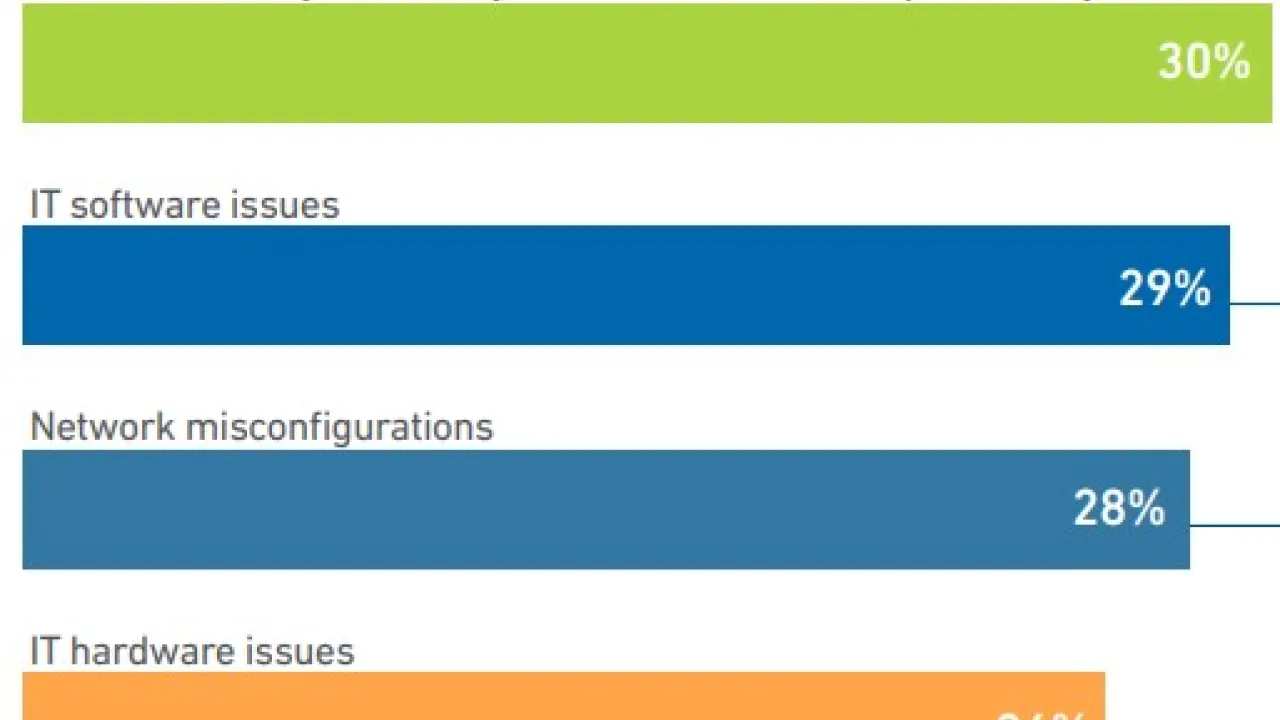

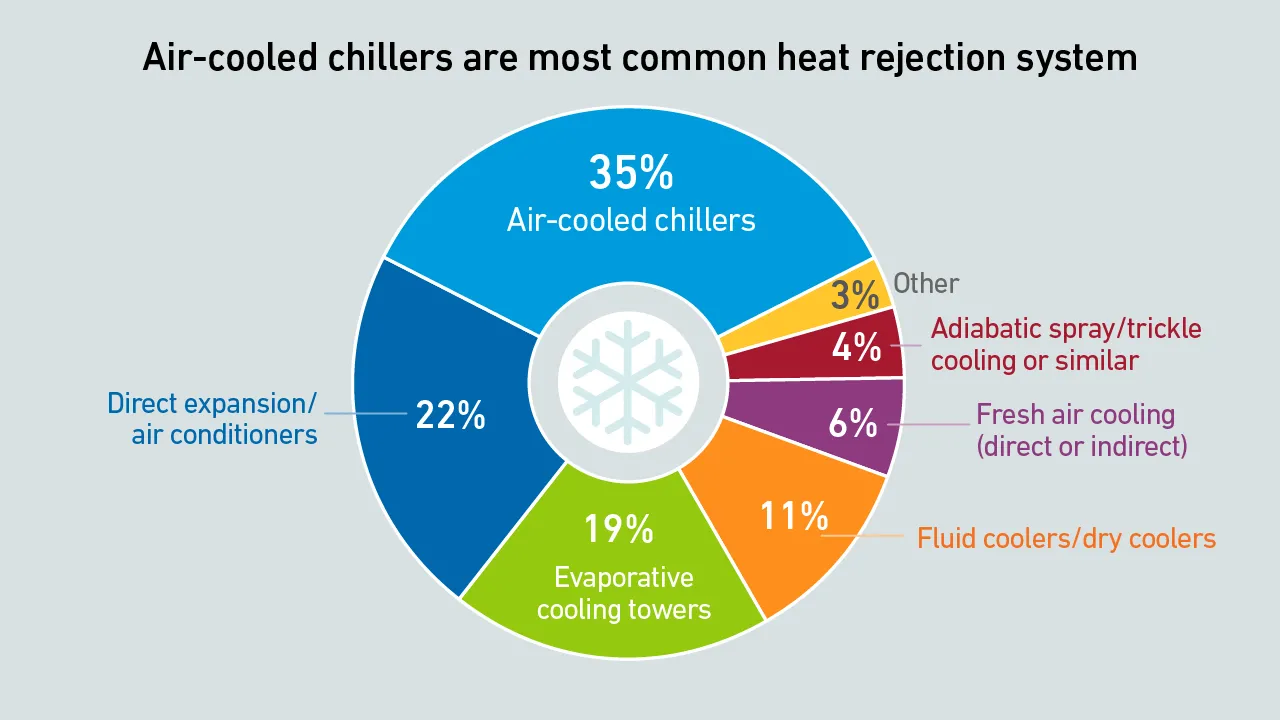

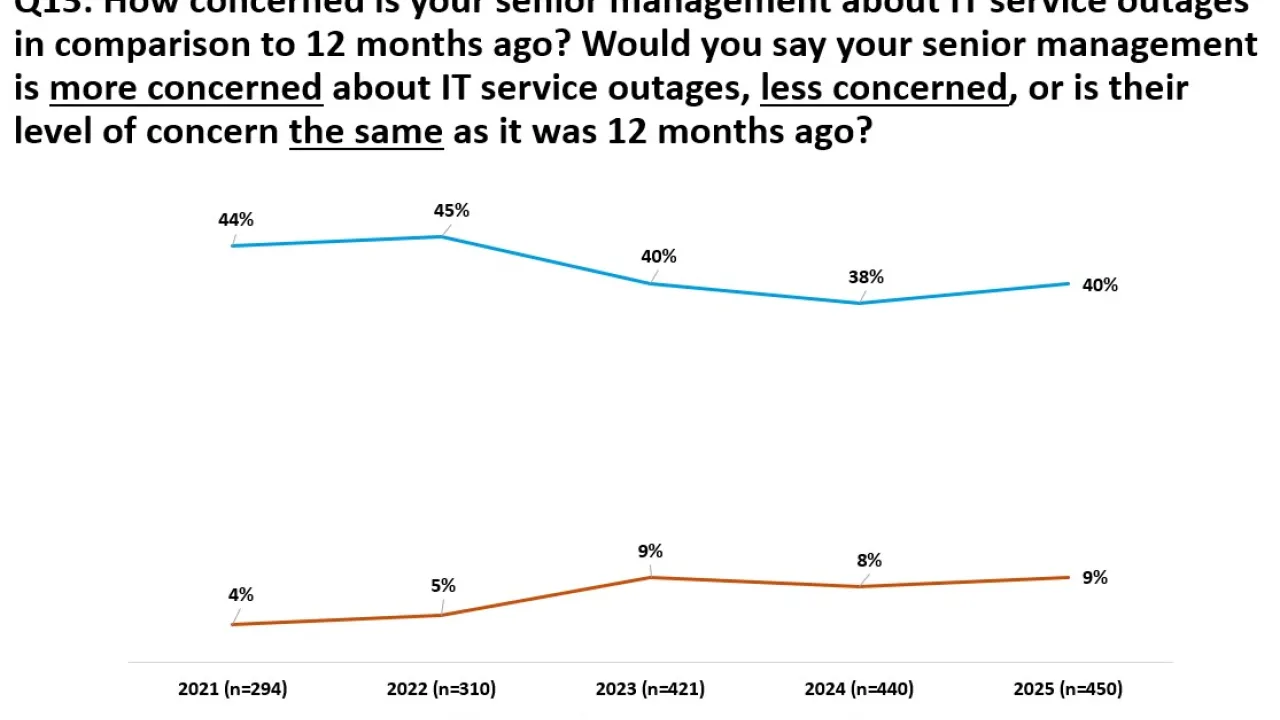

Results from Uptime Institute's 2025 Data Center Resiliency Survey (n=970) focus on data center resiliency issues and the impact of outages on the data center sector globally. The attached data files below provide full results of the survey,…

High-end AI systems receive the bulk of the industry's attention, but organizations looking for the best training infrastructure implementation have choices. Getting it right, however, may take a concerted effort.

AI is not a uniform workload - the infrastructure requirements for a particular model depend on a multitude of factors. Systems and silicon designers envision at least three approaches to developing and delivering AI.

Agentic AI offers enormous potential to the data center industry over the next decade. But are the benefits worth the inevitable risks?

The emergence of the Chinese DeepSeek LLM has raised many questions. In this analysis, Uptime Intelligence considers some of the implications for all those primarily concerned with the deployment of AI infrastructure.

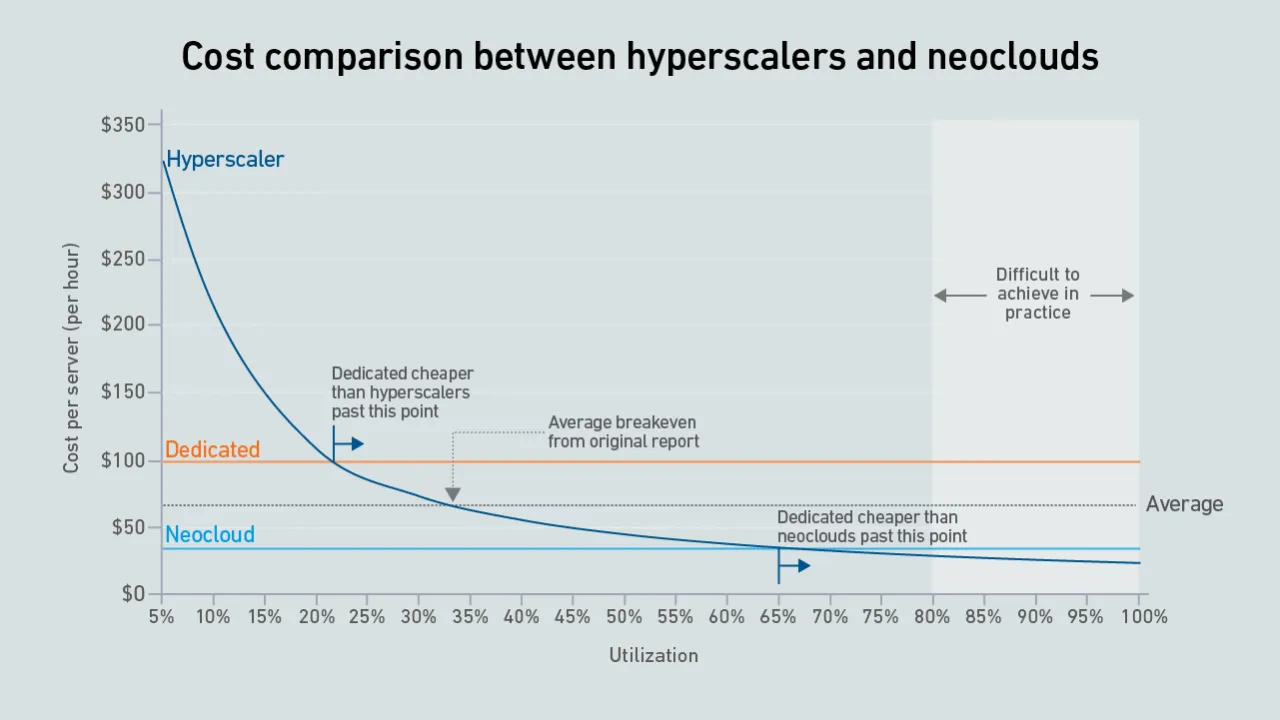

A new wave of GPU-focused cloud providers is offering high-end hardware at prices lower than those charged by hyperscalers. Dedicated infrastructure needs to be highly utilized to outperform these neoclouds on cost.

The US government is applying a new set of rules to control the building of large AI clusters around the world. The application of these rules will be complex.

Max Smolaks

Andy Lawrence

Jacqueline Davis

Paul Carton

Anthony Sbarra

Laurie Williams

Rose Weinschenk

Daniel Bizo

John O'Brien

Dr. Owen Rogers

Peter Judge