DLC was developed to handle high heat loads from densified IT. True mainstream DLC adoption remains elusive; it still awaits design refinements to address outstanding operational issues for mission-critical applications.



European national grid operators are advised to adopt proposed grid code requirements to protect their infrastructure from risks, such as data center activity, even though Commission action on the issue has stalled.

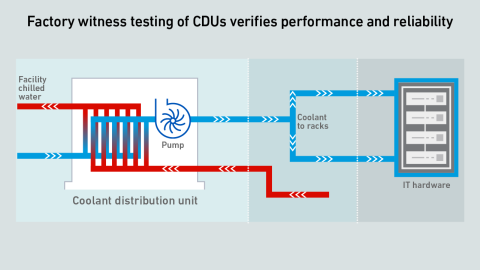

DLC introduces challenges at all levels of data center commissioning. Some end users accept CDUs without factory witness testing, a significant departure from the conventional commissioning script.

Uptime Intelligence’s predictions for 2025 are revisited and reassessed with the benefit of hindsight.

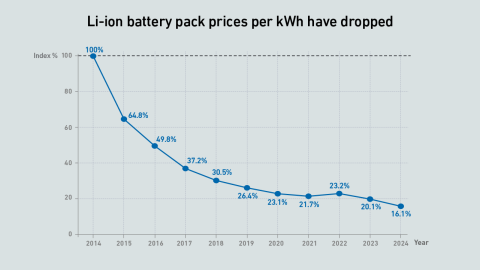

Meeting the stringent technical and commercial standards for UPS energy storage applications takes time and investment — during which Li-ion technology keeps evolving. With Natron gone, will ZincFive be able to take the opportunity?

A bout of consolidation and investment activity in cooling systems in the past 12 months reflects widespread expectation of a continued spending surge on data center infrastructure.

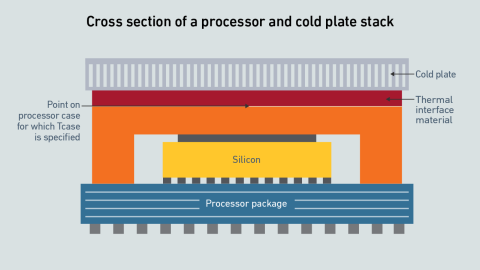

Performant cooling requires a full-system approach to eliminate thermal bottlenecks. Extreme silicon TDPs and highly efficient cooling do not have to be mutually exclusive if the data center and chip vendors work together.

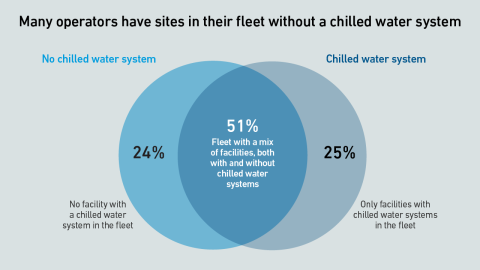

Currently, the most straightforward way to support DLC loads in many data centers is to use existing air-cooling infrastructure combined with air-cooled CDUs.

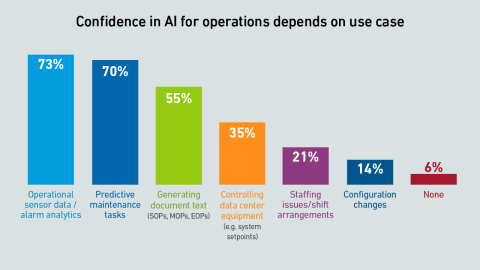

Most operators do not trust AI-based systems to control equipment in the data center. This has implications for software products that are already available, as well as those in development.

In Northern Virginia and Ireland, simultaneous responses by data centers to fluctuations on the grid have come close to causing a blackout. Transmission system operators are responding with new requirements on large demand loads.

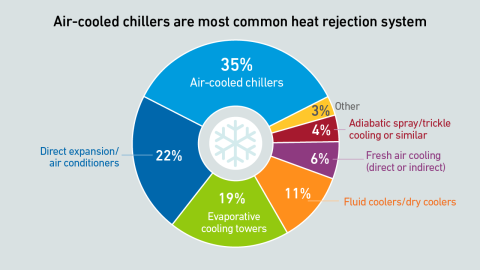

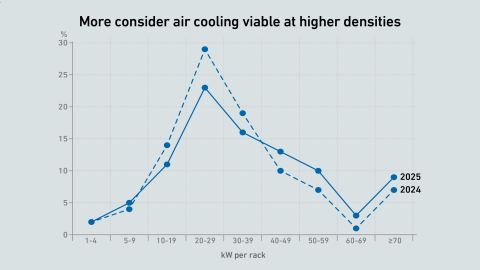

Against a backdrop of higher densities and the push toward liquid cooling, air remains the dominant choice for cooling IT hardware. As long as air cooling works, many see no reason to change, and more think it is viable at high densities.

Real-time computational fluid dynamics (CFD) analysis is gradually nearing reality, with GPUs now capable of producing high-fidelity simulations in under 10 minutes. However, many operators may question why this is necessary.

Direct liquid cooling adoption remains slow, but rising rack densities and the cost of maintaining air cooling systems may drive change. Barriers to integration include a lack of industry standards and concerns about potential system failures.

The data center industry is on the cusp of the hyperscale AI supercomputing era, where systems will be more powerful and denser than the cutting-edge exascale systems of today. But will this transformation really materialize?

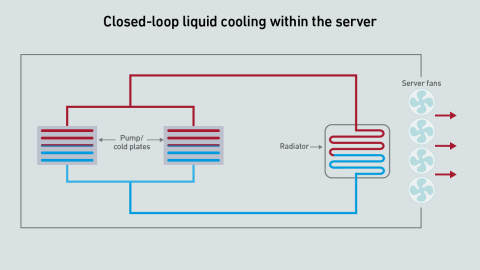

Liquid cooling contained within the server chassis lets operators cool high-density hardware without modifying existing infrastructure. However, this type of cooling has limitations in terms of performance and energy efficiency.

Jacqueline Davis


Peter Judge


Andy Lawrence


Daniel Bizo


Max Smolaks


John O'Brien


Rose Weinschenk
