The US government's AI compute diffusion rules, introduced in January 2025, will be rescinded, with new rules to follow. The government also warns that any dealings linked to advanced Chinese chips will require US export authorization. Operators still face tough demands.

Data center owners are as committed to Tier III and Tier IV designs as ever, but more will be required to share power, adding complexity and possibly risk.

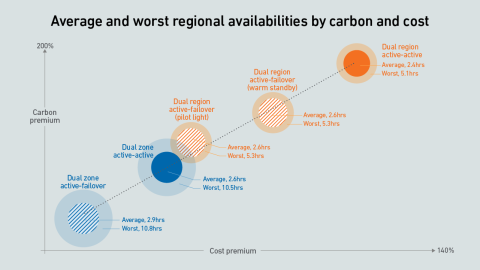

Organizations that architect resiliency into their cloud applications should expect a sharp rise in carbon emissions and costs. Some architectures provide a better compromise on cost, carbon and availability than others.

In the US, the politicization of data center development is underway, driven by its impact on power prices. As state governments seek ways to protect consumers, operators will need to engage in the policy debate.

Many operators expect GPUs to be highly utilized, but examples of real-world deployments paint a different picture. Why are expensive compute resources being wasted - and what effect does this have on data center power consumption?

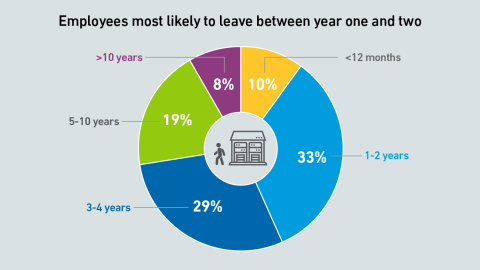

Data center owners and operators find it challenging to retain qualified staff. Research-backed strategies can help them build stronger mentorship programs and improve staff retention.

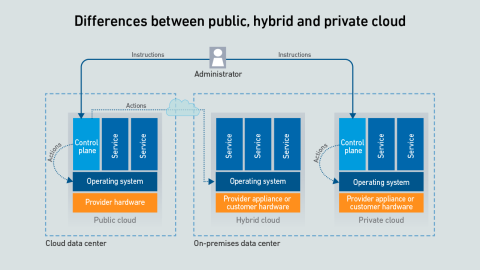

Hyperscalers offer a confusing array of on-premises versions of their public cloud-enabling infrastructure - the differences between them are rooted in whether the customer or the provider manages the control plane and server hardware.

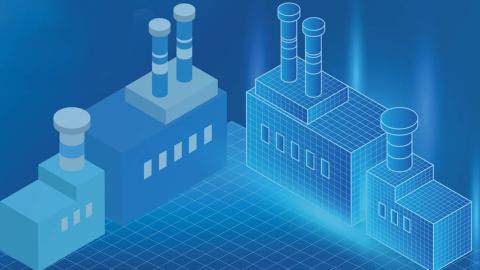

Digital twins are increasingly valued in complex data center applications, such as designing and managing facilities for AI infrastructure. Digitally testing and simulating scenarios can reduce risk and cost, but many challenges remain.

Under the EU's Digital Operational Resilience Act (DORA), data centers designated as "critical" for their role in financial services will face direct regulatory oversight and new resiliency requirements.

Cloud providers live and die based on trust - customers rely on them to run workloads effectively, offer scalable capacity, keep prices stable and keep data confidential. But recent geopolitical instability threatens to undermine that trust.

The global tariff crisis initiated by the US administration is expected to have strong, long-lasting effects on the data center sector, driving up prices and slowing growth.

While AI infrastructure build-out may focus on performance today, over time data center operators will need to address efficiency and sustainability concerns.

AI vendors claim that "reasoning" can improve the accuracy and quality of the responses generated by LLMs, but this comes at a high cost. What does this mean for digital infrastructure?

Data center builders who need power must navigate changing rules and unpredictable demands - and be prepared to trade.

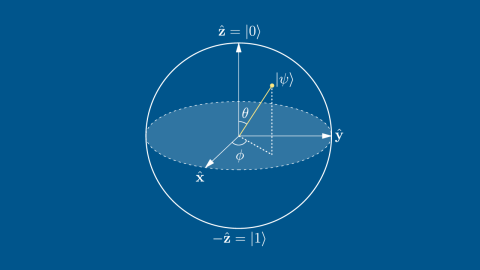

Quantum computing progress is slow; press releases often fail to convey the work required to make practical quantum computers a reality. Data center operators do not need to worry about quantum computing right now.

Daniel Bizo

Andy Lawrence

Dr. Owen Rogers

Peter Judge

Max Smolaks

Rose Weinschenk

John O'Brien

Douglas Donnellan

Seb Shehadi

Dr. Tomas Rahkonen
