UII UPDATE 217 | Q4 2023

Intelligence Update

"Hyperscale colocation": the emergence of gigawatt campuses

Some builders, investors and operators are proposing massive new "gigawatt" campuses and mega data centers as a solution to the anticipated surge in demand for compute and storage. These campuses are also known as "hyperscale colocation," although some will include data centers owned and operated by cloud providers. They may not reach gigawatt scale, but their planned power provision is upwards of 500 megawatts (MW).

Uptime has identified proposals (or plans) for 26 of these mega data centers since 2021 (see Proposed gigawatt campuses). If all of these projects were built to their planned capacity (a combined total of more than 10,000 MW) and ran at half of that capacity on average, they would consume approximately 45 terawatt-hours (TWh) of energy each year. By comparison, global data center energy use has been estimated at between 200 TWh and 400 TWh per year.
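The 45 TWh figure follows from simple arithmetic: average load multiplied by the 8,760 hours in a year. The minimal Python sketch below reproduces it, assuming a constant average draw equal to half of a combined 10,000 MW of planned capacity. Because the source gives "more than 10,000 MW," this yields a floor rather than an upper estimate. The function name `annual_energy_twh` is illustrative only.

```python
HOURS_PER_YEAR = 8_760  # 365 days x 24 hours; leap years ignored


def annual_energy_twh(capacity_mw: float, utilization: float) -> float:
    """Annual energy use in TWh for a given capacity and average utilization.

    Assumes the load is drawn at a constant average level all year round.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    average_load_mw = capacity_mw * utilization
    mwh_per_year = average_load_mw * HOURS_PER_YEAR
    return mwh_per_year / 1_000_000  # 1 TWh = 1,000,000 MWh


# Assumed scenario: 10,000 MW of planned capacity running at half that level
estimate = annual_energy_twh(10_000, 0.5)
print(f"{estimate:.1f} TWh per year")
```

The result (43.8 TWh) rounds to the article's figure of approximately 45 TWh. Set against the 200 TWh to 400 TWh global estimate, that is roughly 11% to 22% of current data center energy use.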

Context

The 26 mega data center projects identified by Uptime since 2021 will provide a huge boost in worldwide data center capacity but will also significantly increase local and global data center power consumption.

However, there are elements of hype and many challenges to overcome before any of these data centers are built. Financing, connectivity, guaranteed demand, low-carbon power and planning permissions will all need to be in place. Some will likely never be built or reach capacity — but some certainly will.

The gigawatt campus

The main aim of these gigawatt campuses is to provide capacity to hyperscaler customers looking to meet surging demand. However, they will also support enterprises that want to own and operate more of their own digital infrastructure rather than rely on public cloud services.

Based on published plans so far, a gigawatt data center campus will be made up of hyperscaler-size facilities that are physically colocated on the same campus, typically covering millions of square meters. Expensive infrastructure — such as high-bandwidth fiber, subsea landing stations, electricity substations and renewable energy generation and storage — can be shared by multiple tenants.

The cost involved in developing these campuses is huge. One of the very largest, the Prince William Digital Gateway in Virginia (US), which is being developed by Compass Datacenters and QTS Data Centers, is expected to cost around $30 billion over the next 10 years.

Investment on this scale favors a consortium model, which may include stakeholders from across the data center ecosystem. Hyperscalers have a key role to play here: their involvement both drives and guarantees demand, and also brings credibility, financing, connectivity and expertise.

The projects planned so far suggest that, in most cases, there will be one or two lead operators (wholesale colocation companies or hyperscalers), with many smaller operators also expected to take capacity. This will ultimately lead to the emergence of new data center clusters that partly resemble today's clusters in Tier 1 markets.

The gigawatt campuses will feature some of the following characteristics:

- Eventual buildout capacity of more than 500 MW, with resources and space to expand and scale toward 1 gigawatt (GW).

- Supported by redundant and often new high-bandwidth fiber to major centers.

- Located on large, brownfield or redundant sites with minimal environmental impact.

- Designed to enable operators to meet low-carbon or carbon-free energy objectives. This may involve huge power purchase agreements, colocation with power plants and significant use of on-site renewable and other low-carbon energy sources, such as solar, wind, geothermal and even nuclear energy, as well as microgrids to manage on-site and off-site resources.

Data center operators on these sites will have the opportunity to build at scale, across multiple data halls. Their facilities are likely to share some of the following characteristics:

- High efficiency, with a power usage effectiveness (PUE) below 1.2 to optimize energy consumption.

- Modular design of internal and external spaces to enable rapid reconfiguration. Use of regular repeatable designs, materials and installation approaches.

- Use of artificial intelligence (AI)-based management systems to optimize monitoring, performance and availability of data center assets and infrastructure.

- Presence in other gigawatt campuses around the world, enabling repeatable designs and extensive use of software to design and operate facilities.

- Support for high densities, with high-performance servers and GPUs to run AI models, which are likely to be a key driver of demand. This will likely involve liquid cooling options.
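PUE is the ratio of total facility energy to the energy delivered to IT equipment, so a PUE of 1.2 means 0.2 units of overhead (cooling, power distribution losses, lighting and so on) for every unit of IT energy. The short Python sketch below illustrates the ratio using power figures, which is equivalent when loads are steady. The 500 MW IT load is a hypothetical value chosen for illustration, not a figure for any specific campus, and the helper names are illustrative only.

```python
def pue(total_facility_mw: float, it_load_mw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_load_mw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_mw / it_load_mw


def facility_power_mw(it_load_mw: float, target_pue: float) -> float:
    """Total facility power implied by an IT load and a target PUE."""
    return it_load_mw * target_pue


# Hypothetical example: 500 MW of IT load at the 1.2 PUE target
it_load = 500
total = facility_power_mw(it_load, 1.2)
overhead = total - it_load  # power not delivered to IT equipment
print(f"total {total:.0f} MW, overhead {overhead:.0f} MW, PUE {pue(total, it_load):.2f}")
```

At this scale, every 0.1 of PUE corresponds to 50 MW of non-IT load, which is why campus designers focus so heavily on efficiency.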

Proposed gigawatt campuses

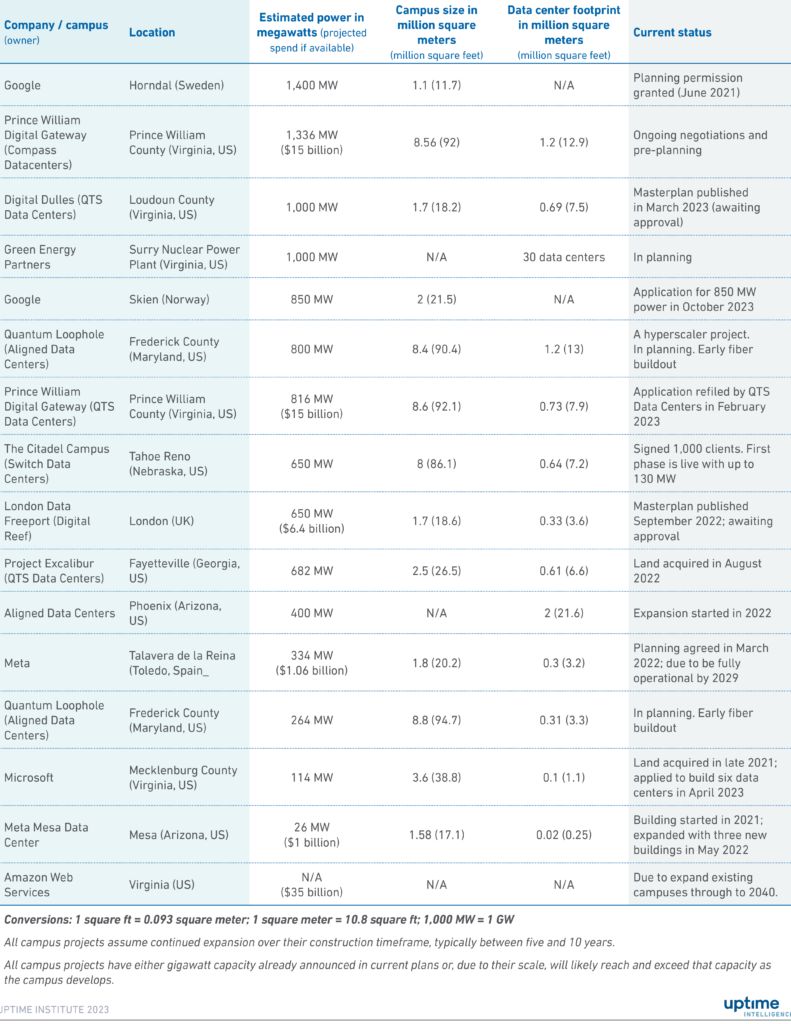

Uptime Institute has tracked a total of 26 projects announced since 2021, of which 19 were announced in 2022 and 2023. Among the projects with detailed plans available, planned capacity ranges from 100 MW at the lowest end to more than 1 GW, and 10 campuses have plans for more than 500 MW. The campuses tracked are located in the US and in Europe, the Middle East and Africa; developments in China, India and the wider Asia-Pacific region have not been included (details of these will be published in a future report). Table 1 lists the largest deals identified by Uptime.

Table 1. Selected mega data center and gigawatt campus projects in the US and Europe

Impact on the data center sector

Uptime Intelligence has identified four areas in which the construction of these gigawatt sites will affect the sector, in both the near and the longer term:

- The data center map. As subsea and long-distance fiber are laid and new clusters of data centers are built, the gravity of the industry — which pulls demand toward the sites that have reliable access to internet exchanges and cloud services — will shift. New builds will be attracted as much to the new (and second-tier) clusters as to the current first-tier hotspots. This will likely bring down the cost of colocation, cloud and connectivity.

- Supply chain. Gigawatt campus sites will not only push up demand for all types of equipment, which is already high, but will also enable operators to build at scale, encouraging automation and investment. While not all of this demand is necessarily new, it will come in waves and create competitive hotspots for skills, equipment and services. With many operators buying at scale, the supply chain will need to develop to meet this increased demand, which will likely favor larger units and call for more innovation than many smaller operators need.

- Sustainability. Given the demands of both regulators and the public, the designers and operators of the new campuses need to ensure, as far as possible, that the sites are highly efficient and powered by carbon-free energy. The scale of the new developments makes this challenging. In most cases, the site has been selected to take advantage of a low-carbon energy source, but much greater innovation can also be expected: the huge and reliable demand from IT will make large-scale innovation and investment possible. These sites will be centers for microgrids, long-duration batteries, on-site renewable energy generation and other experiments.

- Resiliency. The concentration of facilities in clusters and hotspots around the world has been worrying regulators and enterprises alike. The extended loss of power at a big Tier 1 data center location could cause major disruption and even economic damage. The new gigawatt campuses (and associated fiber) outside of these centers, along with the development of new hubs in smaller cities, will likely provide more resiliency and diversity across national and international digital infrastructure.

The Uptime Intelligence View

Internet connectivity, and with it the global map of digital infrastructure, is being extended and reengineered, led by both hyperscale operators and colocation companies. A new overlay of gigawatt campuses, heavily connected by high-bandwidth fiber, is beginning to emerge alongside the traditional data center hotspots. This change will be evolutionary, not revolutionary, and is designed to meet runaway demand and changing workloads as well as to improve sustainability and resiliency.